MLOps, AIOps, DataOps, ModelOps, and even DLOps: are these buzzwords hitting your newsfeed? Whether they are or not, it is high time to catch up on the latest developments in AI-powered business practices. Machine Learning Model Operationalization Management (MLOps) is a way to take the pain out of the development process and make delivering ML-powered software easier, not to mention making every team member’s life easier.

Let’s check that we are on the same page about the principal terms. Disclaimer: DLOps is not about IT operations for deep learning; although people keep googling this abbreviation, it has nothing to do with MLOps at all. Next, AIOps, a term coined by Gartner in 2017, refers to applying the cognitive computing of AI and ML to optimize IT operations. Finally, DataOps and ModelOps stand for managing datasets and models, respectively, and are part of the overall MLOps “triple infinity” chain of Data, Model, and Code.

While MLOps looks like ML plus DevOps at first glance, it has peculiarities of its own to digest. We prepared this post to give you a detailed overview of MLOps practices, along with a list of actionable steps for implementing them in any team.

Per Forbes, the MLOps solutions market is set to reach $4 billion by 2025. It is not surprising that data-driven insights are changing the landscape of every market vertical. Farming and agriculture serve as an illustration: the value of AI in the US agricultural market is projected at 2,629 million for 2025, almost three times what it was in 2020.

Two critical reasons explain ML’s success: its power to solve perceptual problems and its power to solve multi-parameter problems. In practice, ML models can provide a plethora of functionality, namely recommendation, classification, prediction, content generation, question answering, automation, fraud and anomaly detection, information extraction, and annotation.

MLOps is about managing all of these tasks. However, it also has its limitations, which we recommend bearing in mind when putting ML models into production:

MLOps is particularly useful when experimenting with models through an iterative approach. Because ML is experimental, MLOps is built to go through as many iterations as necessary. It helps to find the right set of parameters and achieve reproducible models. Any change in the data version, the hyperparameters, or the code version produces a new deployable model version, which supports continued experimentation.
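To make the link between inputs and model versions concrete, here is a minimal sketch of one way a team might derive a model version ID from the data version, the hyperparameters, and the code version. The function name `model_version`, the labels such as `data-v1`, and the 12-character ID length are assumptions made for illustration; they are not part of any particular MLOps tool.

```python
import hashlib
import json


def model_version(data_version: str, hyperparams: dict, code_version: str) -> str:
    """Derive a short, deterministic ID from the three inputs that define a model."""
    payload = json.dumps(
        {"data": data_version, "hyperparams": hyperparams, "code": code_version},
        sort_keys=True,  # key order must not change the resulting ID
    )
    # Hypothetical convention: keep the first 12 hex characters of the SHA-256 digest.
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


# Same inputs (in any key order) give the same ID; a changed hyperparameter gives a new one.
base = model_version("data-v1", {"lr": 0.01, "depth": 6}, "abc123")
same = model_version("data-v1", {"depth": 6, "lr": 0.01}, "abc123")
tuned = model_version("data-v1", {"lr": 0.05, "depth": 6}, "abc123")
```

Because the ID depends only on its inputs, two runs with identical data, hyperparameters, and code map to the same version, while any change to one of the three yields a new one.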

Every ML project aims to build a statistical model from data by applying a machine learning algorithm. Hence, Data and ML Model emerge as two additional artifacts alongside the Code Engineering part of software development. In general, the ML lifecycle consists of three elements:

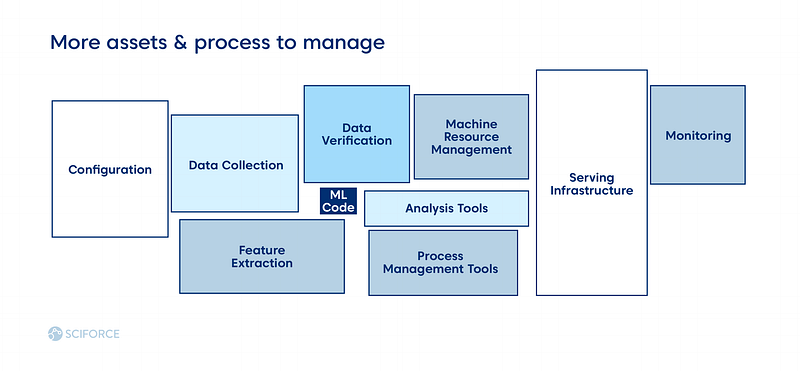

Because ML introduces two extra elements into the software development lifecycle, everything becomes more complicated than applying DevOps to conventional software development. While MLOps still calls for source control, unit and integration testing, and continuous delivery of the package, it brings some differences compared to DevOps:

The level of automation of each step (data engineering, model engineering, and deployment) defines the overall maturity of MLOps. Ideally, the CI and CD pipelines should be automated for an MLOps system to count as mature. Hence, there are three levels of MLOps, categorized by the degree of process automation:

Unlike software reuse in DevOps, model reuse is a different story, as it requires manipulating data and scenarios. As a model decays over time, it needs retraining. In general, data and model versioning plays the role of “code versioning” in MLOps, and it demands more effort than in DevOps.
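As an illustration of how model decay can drive retraining, the sketch below flags a model once its recent accuracy drops too far below the accuracy measured at deployment. The function name `needs_retraining`, the accuracy metric, and the 0.05 threshold are assumptions chosen for the example; a real team would pick the metric and threshold that fit its own use case.

```python
from statistics import mean


def needs_retraining(
    baseline_accuracy: float,
    recent_accuracies: list[float],
    max_drop: float = 0.05,  # hypothetical tolerance; tune per project
) -> bool:
    """Return True when average recent accuracy falls more than max_drop below the baseline."""
    if not recent_accuracies:
        return False  # no monitoring data yet, so no evidence of decay
    return baseline_accuracy - mean(recent_accuracies) > max_drop


# A model deployed at 0.90 accuracy, monitored over three evaluation windows.
stable = needs_retraining(0.90, [0.89, 0.88, 0.90])
decayed = needs_retraining(0.90, [0.80, 0.82, 0.78])
```

In this sketch `stable` is `False` and `decayed` is `True`, so only the second model would be queued for retraining.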

To think through a hybrid MLOps approach, the team implementing it needs to assess the possible outcomes. Hence, we’ve developed a generalized pros-and-cons list, which may not apply to every scenario.

We assume that it may take some time for any team to adapt to MLOps and develop its own modus operandi. Hence, we propose a list of possible stumbling blocks to anticipate:

MLOps requires knowledge of data biases and a high level of discipline within the organization that decides to implement it.

As a result, every company should develop its own set of practices to adapt MLOps to its AI development and automation efforts. We hope that these guidelines contribute to the smooth adoption of this philosophy in your team.