1. Instant Information Retrieval The system was required to deliver accurate answers within 2 seconds, even during high traffic. This involved optimizing response times, efficiently processing large datasets, and supporting multiple simultaneous queries without compromising performance, thereby reducing user reliance on live consultants during peak times.

2. Integration with Existing Systems The chatbot needed seamless integration with the company's knowledge base and IT infrastructure, connecting to databases with presentations, PDFs, and FAQs while ensuring compatibility with internal systems for smooth operation.

3. Handling Diverse Query Types Clients in the financial industry often have complex questions about product features and industry-specific solutions. The chatbot required advanced natural language processing to ensure accurate and relevant responses to these diverse queries.

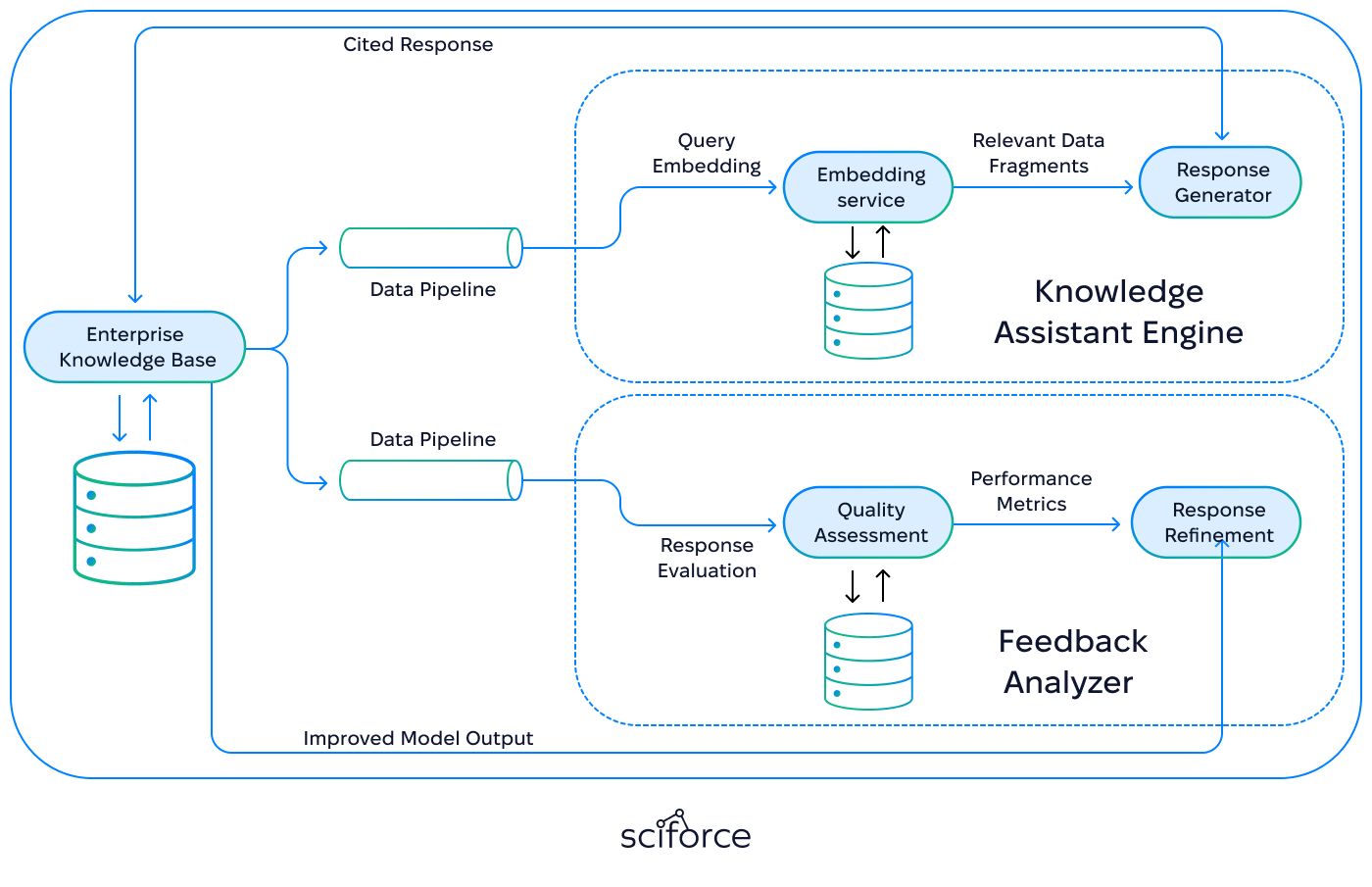

4. Monitoring and Improving Response Quality Maintaining high response quality required continuous evaluation, monitoring the accuracy and relevance of responses, analyzing user feedback, and refining the system based on performance insights.

5. Evaluation of Generative AI Responses Evaluating AI-generated responses was challenging, as traditional metrics couldn’t assess semantic relevance. A specialized approach with embedding-based similarity metrics and a strong "Ground Truth" dataset was essential for consistent evaluation and quality improvement.

1. Data Processing and Storage The system processes content like presentations, PDFs, and website text by dividing it into token-based chunks and converting each into numerical embeddings. These embeddings, along with metadata like document source, are stored in a vector database. User queries are also converted into embeddings and compared using cosine similarity, enabling quick and accurate retrieval of relevant fragments.
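The chunk-embed-retrieve flow described above can be illustrated with a minimal sketch. It assumes whitespace-separated words as a stand-in for real tokens and tiny hand-written vectors in place of model-generated embeddings. The names `chunk_tokens`, `cosine_similarity`, `top_k`, and the record fields are hypothetical, and a real vector database would run the similarity search internally.

```python
import math


def chunk_tokens(tokens, size):
    """Split a list of tokens into consecutive chunks of at most `size` tokens."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_embedding, records, k=2):
    """Return (score, record) pairs for the k most similar records, best first."""
    scored = [(cosine_similarity(query_embedding, r["embedding"]), r) for r in records]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Toy store: each record keeps the fragment text, its source, and an embedding.
# The three-dimensional vectors are made up for illustration only.
records = [
    {"text": "Pricing tiers and licensing", "source": "pricing.pdf", "embedding": [0.9, 0.1, 0.0]},
    {"text": "How to reset a password", "source": "faq.html", "embedding": [0.1, 0.9, 0.0]},
    {"text": "Quarterly roadmap overview", "source": "deck.pptx", "embedding": [0.0, 0.2, 0.9]},
]

query_embedding = [0.8, 0.2, 0.0]  # pretend embedding of a pricing question
best_score, best_record = top_k(query_embedding, records, k=1)[0]
print(best_record["source"], round(best_score, 3))
```

Storing the source next to each embedding is what later allows answers to cite the document a fragment came from.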

2. AI Model Integration OpenAI GPT-4o-mini was chosen for its high performance and cost efficiency. We considered other models but dismissed Llama due to lower response quality and Anthropic's models due to higher costs. The model is integrated via API and works with the vector database to handle user queries. Each query is converted into an embedding and matched with the most relevant data fragments, which are then passed to the model. By focusing only on these fragments instead of the entire dataset, the model generates accurate and relevant responses.
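One way to picture how retrieved fragments reach the model is the sketch below. It only assembles a chat-style message list (the `role`/`content` structure used by chat completion APIs) and does not call any API. The function name `build_messages`, the prompt wording, and the fragment fields are assumptions for illustration; the actual prompt used in the project is not described here.

```python
def build_messages(question, fragments):
    """Assemble a chat-style message list from a question and retrieved fragments."""
    # Number each fragment so the model can cite it in its answer.
    context = "\n\n".join(
        f"[{i}] ({frag['source']}) {frag['text']}"
        for i, frag in enumerate(fragments, start=1)
    )
    system = (
        "Answer using only the numbered context fragments. "
        "Cite fragment numbers, and say so if the context is insufficient."
    )
    user = f"Context:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


# Hypothetical fragment returned by the vector search.
fragments = [{"source": "pricing.pdf", "text": "The Standard plan includes five seats."}]
messages = build_messages("How many seats does the Standard plan include?", fragments)
print(messages[1]["content"])
```

Because only the top-ranked fragments are placed in the prompt, the request stays small regardless of how large the knowledge base grows.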

3. Microservice Architecture The chatbot is implemented as a standalone microservice, making it highly flexible for integration into various systems and infrastructures. Its API design allows for reuse in different environments, further enhancing its adaptability. The architecture prioritizes high performance and scalability, enabling the system to handle multiple simultaneous queries without compromising speed or accuracy, even during heavy traffic.

4. Metadata and Insights The system collects and provides detailed metadata, such as document sources, page numbers, content types, and timestamps, in a structured JSON format. This metadata is primarily used for backend analysis to monitor system performance, evaluate response relevance, and refine the chatbot’s accuracy over time. While not directly useful to end-users, it serves as a critical tool for ongoing system improvement and quality assurance.
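As an illustration of what such a structured record might look like, the sketch below serializes one metadata entry to JSON. The class name `FragmentMetadata`, the field names, and the sample values are hypothetical; the source only states that document sources, page numbers, content types, and timestamps are captured.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class FragmentMetadata:
    """Hypothetical metadata attached to one retrieved fragment."""
    source: str
    page: int
    content_type: str
    retrieved_at: str  # ISO 8601 timestamp


def to_json(meta):
    """Serialize a metadata record to a JSON string."""
    return json.dumps(asdict(meta), indent=2)


# Sample values are invented for the example.
meta = FragmentMetadata(
    source="product-overview.pdf",
    page=12,
    content_type="pdf",
    retrieved_at=datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc).isoformat(),
)
print(to_json(meta))
```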

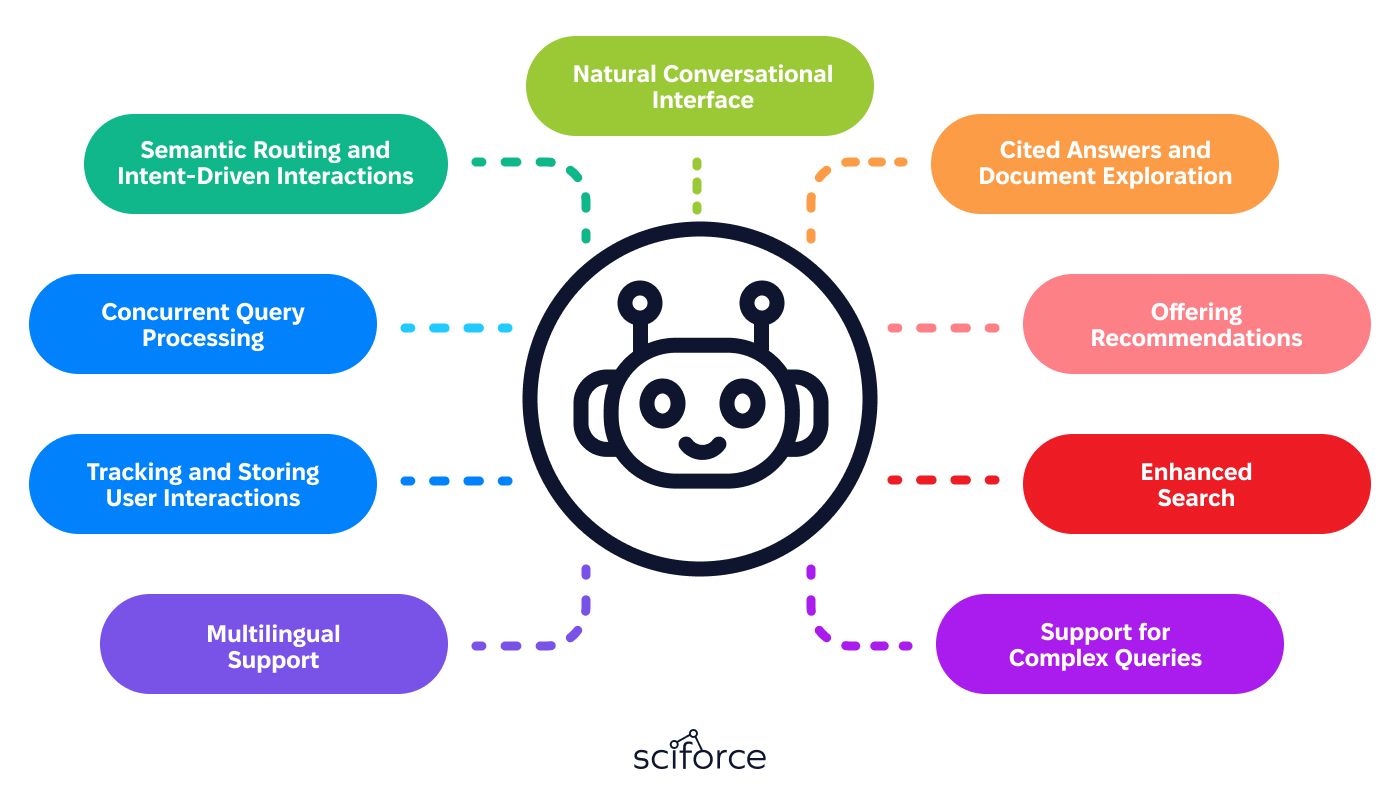

1. Natural Conversational Interface The Knowledge Assistant provides a user-friendly conversational interface, enabling natural language interactions. Users can ask questions as if conversing with a human consultant, avoiding rigid menu-based interfaces and enhancing accessibility for non-technical users.

2. Cited Answers and Document Exploration The system provides concise, cited answers directly from source documentation, ensuring accuracy and reliability. Additionally, it enables users to explore underlying materials such as presentations, PDFs, and website content in more detail, empowering them to make informed decisions.

3. Offering Recommendations Beyond delivering information, the Knowledge Assistant analyzes user intent and offers context-aware recommendations, suggesting relevant products, services, or actions based on user queries. This feature enhances customer engagement by proactively addressing user needs and guiding them to suitable solutions.

4. Enhanced Search Users no longer need to sift through multiple articles manually. The system accelerates learning and improves search accuracy by breaking down articles into smaller, easily searchable fragments.

5. Support for Complex Queries The system is equipped to handle detailed and industry-specific questions, particularly in finance and ERP solutions. Its advanced natural language processing ensures accurate and contextually relevant responses, tailored to complex user inquiries.

6. Multilingual Support The system understands, processes, and responds to queries in over 100 languages, breaking down language barriers and making customer service accessible to a wider audience.

7. Tracking and Storing User Interactions The Knowledge Assistant records user interactions, including queries, responses, and metadata. While primarily for backend analysis, this data helps refine system performance, improve response accuracy, and provide insights into user behavior and needs.

8. Concurrent Query Processing The chatbot handles multiple user queries simultaneously without compromising performance, so users receive timely and accurate responses even during peak usage periods.
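A minimal sketch of concurrent handling, assuming an asynchronous service where each query spends most of its time waiting on I/O (vector search, model API). The `answer` coroutine is a hypothetical stand-in that simulates latency with a short sleep; it is not the project's implementation.

```python
import asyncio


async def answer(query):
    """Stand-in for retrieval plus a model call; the sleep simulates I/O latency."""
    await asyncio.sleep(0.01)
    return f"answer to: {query}"


async def handle_all(queries):
    """Process all queries concurrently; results keep the input order."""
    return await asyncio.gather(*(answer(q) for q in queries))


results = asyncio.run(handle_all(["pricing", "integrations", "licensing"]))
print(results)
```

While one query waits on I/O, the event loop serves the others, so total time stays close to the slowest single query rather than the sum of all of them.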

9. Semantic Routing and Intent-Driven Interactions The system employs semantic routing to determine the intent behind user queries, allowing for more accurate and context-aware responses. By analyzing the meaning and context of a user's request, the Knowledge Assistant can route queries to the appropriate resources or responses.
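Semantic routing can be sketched as a nearest-prototype lookup: each route is represented by an embedding, and a query goes to the route whose embedding it most resembles, with a fallback when nothing is similar enough. The route names, toy vectors, and the 0.5 threshold below are assumptions chosen for illustration, not values from the project.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical routes, each with a made-up prototype embedding.
ROUTES = {
    "product_info": [0.9, 0.1, 0.0],
    "recommendation": [0.1, 0.9, 0.1],
    "small_talk": [0.0, 0.1, 0.9],
}


def route(query_embedding, routes=ROUTES, threshold=0.5, fallback="general_search"):
    """Pick the route most similar to the query, or the fallback if none beats the threshold."""
    best_name, best_score = fallback, threshold
    for name, prototype in routes.items():
        score = cosine_similarity(query_embedding, prototype)
        if score > best_score:
            best_name, best_score = name, score
    return best_name


print(route([0.8, 0.2, 0.1]))
print(route([0.0, 0.0, 0.0]))
```

The threshold keeps ambiguous or off-topic queries from being forced into an ill-fitting route.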

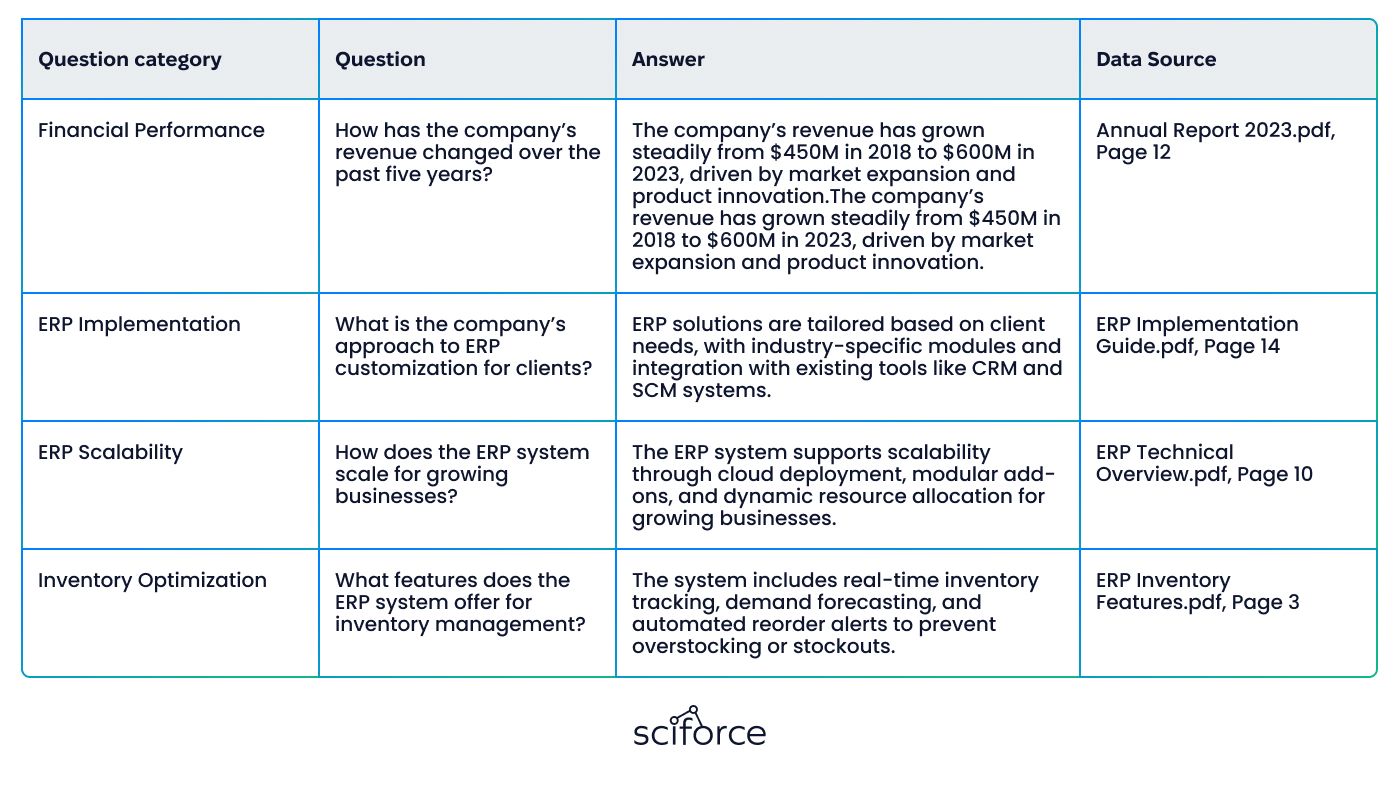

1. Data Analysis and Creation of the Test Set

The first stage involved analyzing the types of data to be processed, such as text, PDFs, and website content. A Ground Truth test set was then created, consisting of approximately 100 example questions and corresponding answers. These answers served as a benchmark for evaluating the quality of the model’s responses. This stage ensured the availability of high-quality data for future evaluations and performance measurement.

2. Development During development, the service architecture was built on FastAPI, a modern and efficient API framework. Key steps included:

Once the infrastructure was established, the system underwent evaluation using a Ground Truth test set, consisting of sample questions and expected answers. We employed an Embedding-Based Similarity metric to measure how closely the generated responses aligned with the expected answers. This approach enabled precise assessment of response accuracy, guiding further refinements to enhance the Knowledge Assistant’s performance.
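The evaluation loop can be sketched as follows. To stay self-contained, the example uses a bag-of-words count vector as a toy stand-in for a real embedding model, so its scores reflect word overlap rather than true semantic similarity. The function names, the sample pairs, and the 0.8 pass threshold are assumptions.

```python
import math
from collections import Counter


def toy_embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def evaluate(pairs, threshold=0.8):
    """Score (generated, expected) answer pairs against a Ground Truth set."""
    if not pairs:
        raise ValueError("pairs must not be empty")
    scores = [cosine(toy_embed(gen), toy_embed(exp)) for gen, exp in pairs]
    return {
        "scores": scores,
        "mean_similarity": sum(scores) / len(scores),
        "pass_rate": sum(s >= threshold for s in scores) / len(scores),
    }


# Invented sample pairs: (generated answer, expected answer).
pairs = [
    ("invoices are due in 30 days", "invoices are due in 30 days"),
    ("yes", "no"),
]
report = evaluate(pairs)
print(report["mean_similarity"], report["pass_rate"])
```

Swapping `toy_embed` for calls to an actual embedding model would leave the rest of the loop unchanged.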

3. Deployment Stage After successful evaluation and optimization, the Knowledge Assistant was ready for production deployment. We created Dockerfiles to package the application into containers, ensuring consistent performance across environments.

Key deployment activities include finalizing API settings, establishing the API contract, conducting live system testing, and making final infrastructure adjustments. The flexible deployment strategy, allowing for containerized or cloud-based implementation, aims to ensure a smooth and reliable launch of the Knowledge Assistant system with minimal operational challenges.

4. Monitoring After deployment, the system undergoes periodic monitoring to assess its performance. This process is flexible and may involve consulting experts for a thorough evaluation. The monitoring results are analyzed and used to adjust and refine the system. Additionally, the system tracks key metrics, such as response times and accuracy rates, for continuous improvement.

Specialists periodically assess the quality of responses generated by the Knowledge Assistant model. Based on these evaluations, necessary adjustments are made to continually enhance the accuracy and quality of the responses.
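A small sketch of how tracked response times and accuracy judgments might be summarized for a monitoring report. The nearest-rank percentile, the 2-second target taken from the response-time requirement, and all sample values are assumptions for illustration.

```python
def percentile(values, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not values:
        raise ValueError("values must not be empty")
    if not 1 <= pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(values)
    rank = -(-pct * len(ordered) // 100)  # ceiling of pct * n / 100
    return ordered[rank - 1]


def summarize(latencies, accurate_flags, target_seconds=2.0):
    """Summarize response times (seconds) and per-answer accuracy judgments."""
    return {
        "p50": percentile(latencies, 50),
        "p95": percentile(latencies, 95),
        "within_target": sum(t <= target_seconds for t in latencies) / len(latencies),
        "accuracy_rate": sum(accurate_flags) / len(accurate_flags),
    }


# Invented sample data: five response times and five reviewer judgments.
latencies = [0.4, 0.6, 0.9, 1.2, 2.5]
accurate_flags = [True, True, False, True, True]
summary = summarize(latencies, accurate_flags)
print(summary)
```

Reporting a high percentile alongside the median helps reveal slow outliers that an average would hide.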

- Result The implementation of the Knowledge Assistant delivered measurable improvements across key business metrics:

- Instant Response The chatbot achieved response times of under 2 seconds, ensuring users received immediate and accurate answers. This enhancement significantly improved customer satisfaction by eliminating delays.

- Cost Optimization Automating repetitive inquiries reduced the need for live consultants, resulting in a 25% reduction in support operational costs. The use of the cost-efficient OpenAI GPT-4o-mini model further minimized expenses while maintaining high-quality responses.

- Sales Team Automation The Knowledge Assistant autonomously handled 78% of all customer queries, reducing the workload of sales and support teams. This allowed staff to concentrate on high-value tasks, such as lead management and personalized support for complex cases.

Discover how an AI Knowledge Assistant can optimize response times, reduce costs, and automate inquiries. Book a consultation today to see how AI can elevate your ERP support experience.