Client Profile

Our client is an international company in the educational technology sector, offering a language learning app. This app supports pronunciation training in over a hundred languages, many of which are not typically addressed by other platforms. It stands out for addressing specific learning challenges like inadequate data for non-English languages, varying accents, and noisy environments.

The company aims to break down linguistic barriers and democratize language education globally, providing an effective and user-friendly tool that helps learners from diverse backgrounds achieve fluency in their chosen languages, enhancing their global communication skills.

Additionally, the application focuses on preserving endangered languages by using specialized models and datasets. By providing the opportunity to learn these languages to anyone, it helps protect languages at risk of disappearing due to a lack of resources and speakers. This ensures they are kept alive for future generations, supporting cultural diversity and heritage.

Our main task was to develop a language learning application capable of serving learners across a broad spectrum of languages. The primary challenge was ensuring accurate and effective speech recognition for over a hundred languages, each with unique accents and pronunciation variations. Additionally, the application's focus on rare and endangered languages posed additional challenges of limited data and the need for specialized models to accurately recognize and process these languages.

1. Limited Datasets

There were significant disparities in the availability of datasets across different languages and phrases, making it difficult to build a comprehensive speech recognition model.

2. Accents and Pronunciation Variations

Language learners exhibit a wide range of accents and pronunciation variations, which poses a challenge for accurate speech recognition and pronunciation training.

3. Speech Anomalies

Managing hesitations, repetitions, and other speech anomalies while accurately highlighting pronunciation errors encountered during the learning process was essential to improve the learning experience.

4. Diverse User Environments

The application needed to function effectively in various user environments, which included background noise and voice variations, complicating the task of accurate speech recognition.

5. Continuous Model Refinement

There was a constant need to refine the models and expand datasets to accommodate new learning dynamics and ensure the application remained effective and up-to-date with evolving language learning requirements.

6. Supporting Endangered Languages

Preserving endangered languages required overcoming the scarcity of linguistic data and developing highly specialized models. These models needed to be trained on limited available data while ensuring high accuracy in recognizing and processing speech patterns unique to these languages.

To address the challenges, we implemented the following solutions:

1. Comprehensive ASR System

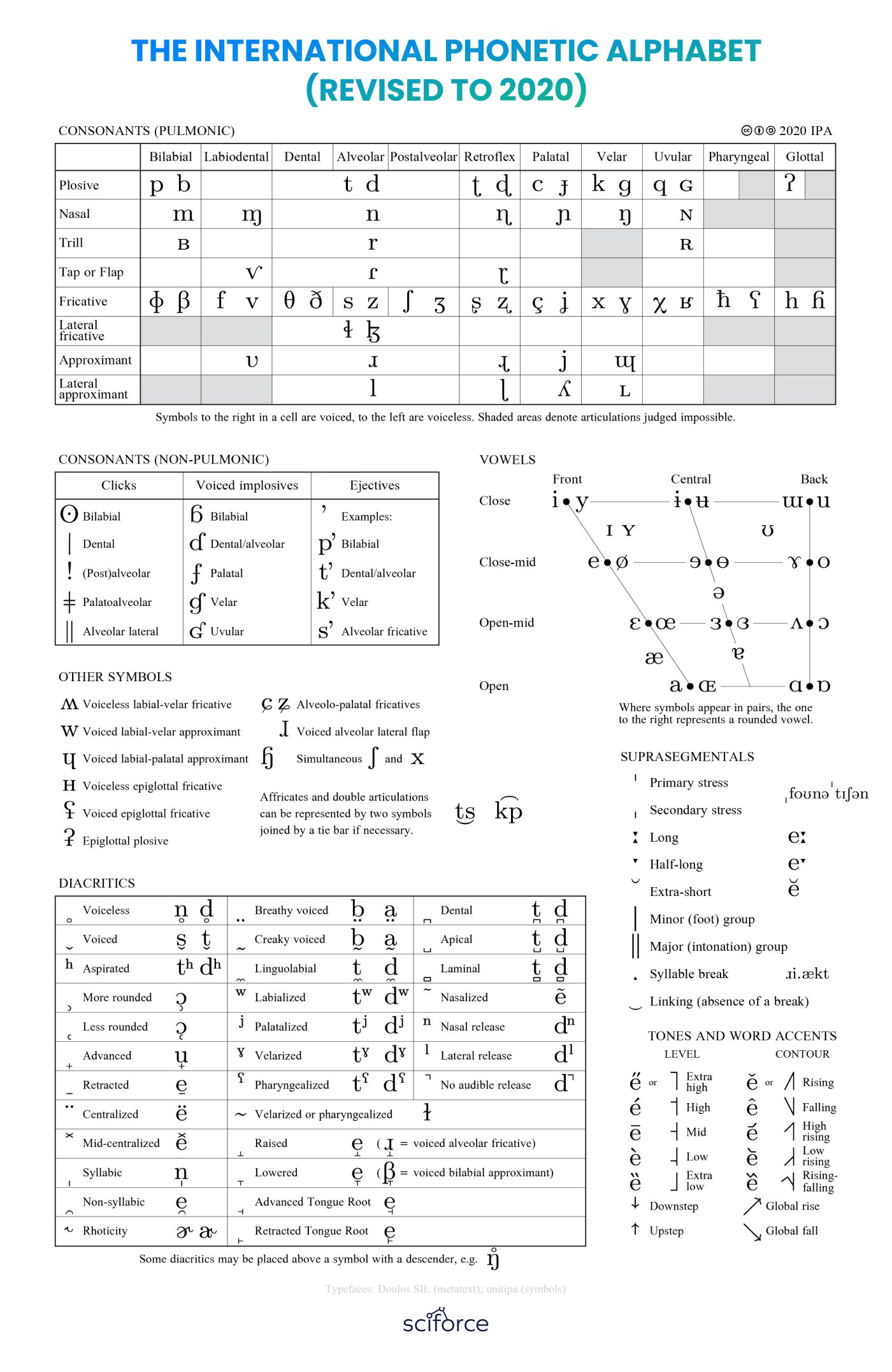

We transitioned to a TensorFlow-based end-to-end ASR system. This approach allowed us to directly map acoustic features to phonemes using the International Phonetic Alphabet (IPA), improving speech recognition accuracy across over a hundred languages.

2. Language-Specific Tags

We incorporated language-specific tags within the phoneme sequences to account for unique accents and pronunciation variations. This customization enabled the application to accurately recognize and adapt to the phonetic characteristics of each language.

3. Integrated Datasets

We leveraged the International Phonetic Alphabet (IPA) phonemes and language-specific tags to combine various datasets, enabling us to create a comprehensive multilingual model. This approach allowed us to train the ASR system on all available data collectively, achieving better results than using separate, smaller datasets and models for each language. By integrating datasets in this way, we enhanced the system's ability to recognize and process speech across a wide range of languages, providing more accurate and effective speech recognition.

We used open-source tools like Epitran to generate phonemic transcriptions from textual transcriptions of audio datasets. This tool helped convert audio data into phonemes, facilitating the integration of diverse linguistic data into a unified model. This method significantly improved the coverage and quality of our speech recognition system for less commonly taught languages.

4. Noise Robustness

Our ASR system was designed to function effectively in diverse user environments. We implemented noise reduction and data augmentation techniques to maintain high accuracy even in noisy conditions.

5. Continuous Model Refinement

We established a process for ongoing refinement and expansion of our models and datasets. This ensures that the application remains effective and adapts to new learning dynamics, accommodating evolving user needs and language variations.

1. Initial Research and Planning

We evaluated the limitations of existing ASR models in recognizing diverse languages and accents. Identifying the need for a flexible solution, we chose TensorFlow for an end-to-end ASR system. A feasibility study was conducted to integrate the International Phonetic Alphabet (IPA) for standardized phoneme representation and improved accuracy.

2. System Design and Transition

We transitioned from Matlab-based ASR models to a more advanced TensorFlow-based end-to-end ASR system. This involved developing a new framework to map acoustic features directly to phonemes using the International Phonetic Alphabet (IPA). To address phonetic inconsistencies across different languages, we incorporated language-specific tags into the phoneme sequences, ensuring accurate and tailored speech recognition for each language.

3. Dataset Expansion and Model Training

We expanded our datasets using open-source tools like Epitran, which we modified to meet the specific needs of our project. These enhanced datasets were used to train models capable of supporting over a hundred languages. Our focus was on creating robust pronunciation models tailored to the unique phonetic characteristics of each language.

4. Implementation and Testing

We integrated the TensorFlow-based ASR system into the language learning application. Rigorous testing was conducted in various user environments to ensure the system's functionality under different noise conditions, such as public places and noisy households. Based on feedback and test results, we iteratively refined the models to enhance accuracy and performance.

5. Deployment and Continuous Improvement

We deployed the language learning application globally. To adapt to new learning dynamics and user feedback, we established a process for continuous model refinement and dataset expansion, regularly updating language data and pronunciation models to maintain high accuracy and user satisfaction.

When developing our ASR solution, we evaluated several modern model architectures, including Transducer-based ASR, CTC, and Attention-based Encoder-Decoder (AED models. We found that the Transformer-based AED model provided the best performance for our needs. Additionally, the attention matrices from this model allowed us to precisely identify where misrecognitions occurred, helping students see which pronunciations they need to improve.

To address the challenges, we implemented several key features in our language learning application:

1. End-to-End ASR System with TensorFlow

We transitioned from conventional Matlab-based ASR models to a more dynamic and modern end-to-end ASR system developed with TensorFlow. This allowed us to directly map acoustic features to phonemes using the International Phonetic Alphabet (IPA).

2. Utilization of the International Phonetic Alphabet (IPA)

The IPA served as the cornerstone of our solution, enabling the system to recognize a wide range of languages. Despite the IPA's inconsistency across languages, we incorporated language-specific tags within the phoneme sequences to address this issue. For example, "hello" in American English is transcribed as "<en-us> hɛˈloʊ," while the Latvian "sveiki" is "<lv> ˈs̪vɛi̯ki," and the Polish "cześć" is "<pl> ʧ̑ɛɕʨ̑."

During the recognition process, we determine the target language and add the appropriate tag to the model's input. This enables the system to produce accurate transcriptions tailored to each language's phonetic characteristics.

4. IPA Phonemes Recognition

An end-to-end ASR system can directly map sounds to written characters or parts of words (graphemes or wordpieces). However, this becomes complicated when dealing with many languages that have different alphabets and writing systems.

Given the significant differences in alphabets across languages, we needed a more complex approach than using a single alphabet or set of wordpieces. The International Phonetic Alphabet (IPA) was very useful for this. However, to use the IPA effectively, we needed a pronunciation model. This model provided the necessary phonemic transcriptions, allowing us to handle the complexities of multiple languages.

5. Pronunciation Models

Incorporating multiple pronunciation models tailored for each language was crucial. We employed open-source tools like Epitran, modified to meet the specific needs of our project, ensuring accurate phonemic transcriptions necessary for the multilingual environment.

6. Focus on Language Proficiency Improvement

Our application was designed to help students learn and improve their language proficiency. Instead of automatically correcting student errors using a language model, which could be counterproductive, the system highlights errors and guides learners towards self-correction.

1. Global Reach

The application now supports over 100 languages, increasing our user base to over 1 million users across 150 countries. This expanded reach has opened new markets and driven a 30% increase in global subscriptions.

2. Improved User Experience

Enhanced user experience has led to a 40% increase in user engagement, with session durations up by 30%. Pronunciation accuracy improvements have contributed to a 25% increase in user retention rates, reducing churn and boosting lifetime value.

3. Efficient Development and Cost-Effectiveness

Leveraging TensorFlow reduced development costs by 20%, as it eliminated the need for large, separate datasets for each language. This efficiency allowed us to release updates 50% faster, enhancing competitive edge and responsiveness to market needs.

4. Affordability

The optimized development approach and resulting cost savings enabled us to reduce subscription costs by 15%, attracting a broader customer base. This pricing strategy, coupled with increased user retention, has driven a 25% increase in annual revenue.

5. Enhanced Learning Outcomes

Providing precise feedback on pronunciation has led to a 35% improvement in learners’ pronunciation scores within six months. This success has resulted in a higher customer satisfaction rate, with positive reviews and recommendations contributing to a 20% increase in referral-based sign-ups.

We've updated a language learning app, empowering millions of users with pronunciation training in over a hundred languages