We partnered with a B2B company from the EdTech sector. Their product is a platform where companies can upload courses and training materials for corporate education in any business area. Initially, their infrastructure was hosted on AWS servers in Ireland.

One of their customers in Germany requested that the infrastructure used to store its educational products be moved from Ireland to Germany.

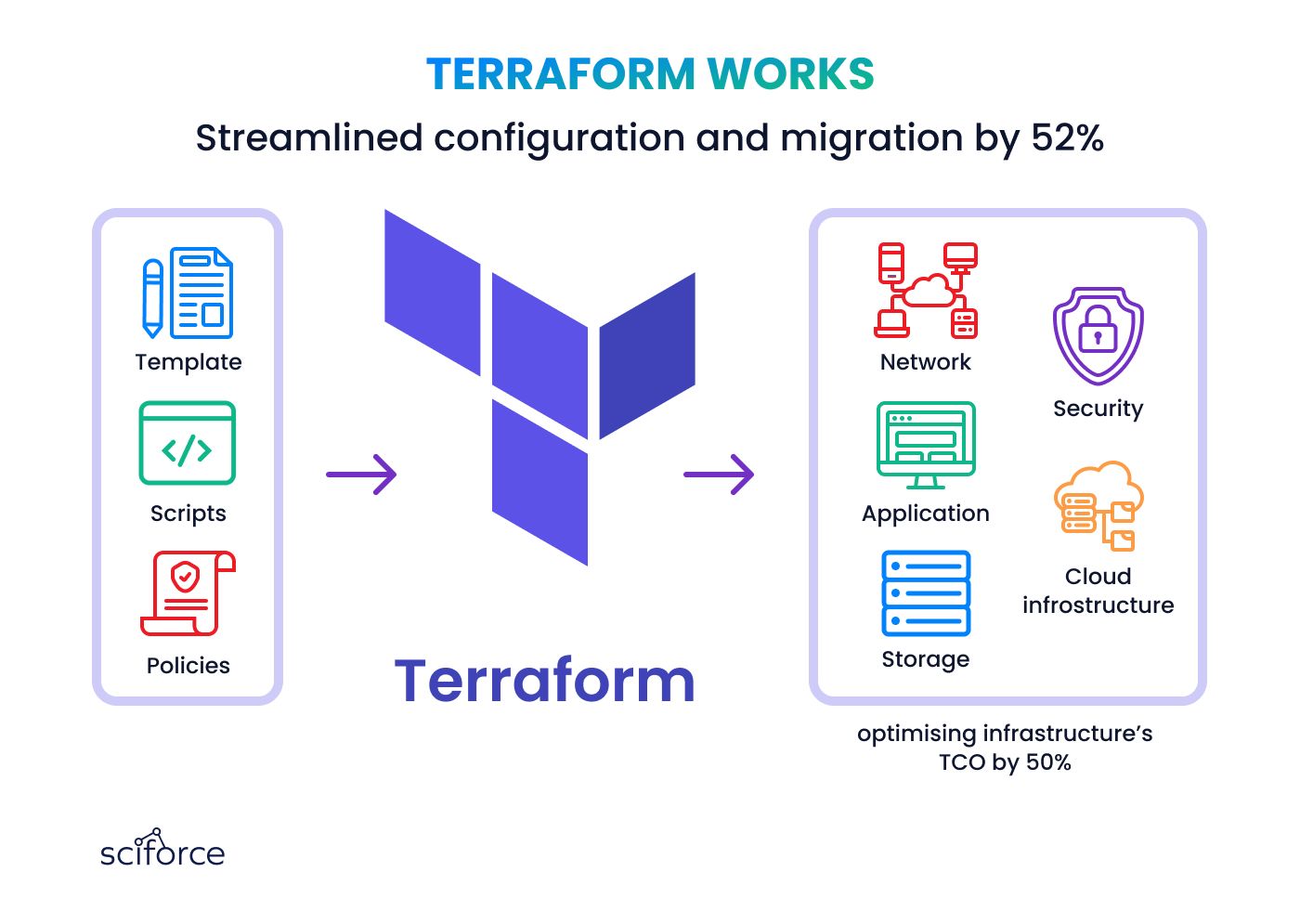

We needed to perform the migration without affecting the client's current operations. Additionally, we needed to describe the production environment in Terraform and optimize some legacy systems.

The main task of this project was to move an extensive AWS infrastructure from Ireland to Frankfurt, Germany, while complying with strict German data protection laws, requiring that personal data be stored only on servers located within Germany.

Regulatory Compliance

We had to ensure that the handling of all personal data complied with German law, which ruled out simpler solutions such as mirroring data across regions or keeping some infrastructure components outside Germany.

Technical Execution

The migration involved more than just moving between AWS data centers. It required detailed planning to move virtual servers, databases, and domain names that were interconnected and not easy to move. Maintaining continuous operation with minimal downtime or performance degradation was critical.

Infrastructure Management

Much of the staging infrastructure was managed using Terraform, which covered about 90 to 95% of the resources. However, the production environment was not fully described in Terraform, necessitating manual adjustments via the AWS console.
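
One way to arrive at a coverage figure like this is to compare the resource IDs recorded in Terraform state with the IDs of the resources that actually exist in the account. The sketch below assumes the state was exported with `terraform show -json` and that the list of live IDs was collected separately; the function names and sample IDs are hypothetical and only illustrate the idea.

```python
def managed_ids(state: dict) -> set:
    """Collect the `id` of every managed resource in `terraform show -json` output."""
    ids = set()

    def walk(module: dict) -> None:
        for resource in module.get("resources", []):
            # Data sources are read-only lookups, so they do not count as managed.
            if resource.get("mode", "managed") != "managed":
                continue
            resource_id = resource.get("values", {}).get("id")
            if resource_id:
                ids.add(resource_id)
        # Resources declared inside modules are nested under child_modules.
        for child in module.get("child_modules", []):
            walk(child)

    walk(state.get("values", {}).get("root_module", {}))
    return ids


def coverage(state: dict, live_ids: set) -> tuple:
    """Return (share of live resources tracked by Terraform, unmanaged IDs)."""
    if not live_ids:
        return 1.0, set()
    unmanaged = live_ids - managed_ids(state)
    return 1 - len(unmanaged) / len(live_ids), unmanaged


# Hypothetical sample: two resources in state, four resources in the account.
sample_state = {
    "values": {
        "root_module": {
            "resources": [{"mode": "managed", "values": {"id": "i-aaa"}}],
            "child_modules": [
                {"resources": [{"mode": "managed", "values": {"id": "sg-bbb"}}]}
            ],
        }
    }
}
share, unmanaged = coverage(sample_state, {"i-aaa", "sg-bbb", "subnet-ccc", "i-ddd"})
print(f"{share:.0%} managed; unmanaged: {sorted(unmanaged)}")
```

The unmanaged IDs are the candidates for importing into Terraform or for manual review in the AWS console.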

Latency Issues

Initial attempts to connect a new regional instance to the old database caused significant latency issues, temporarily reducing system efficiency. This situation underscored the importance of having servers and databases in close proximity to ensure optimal performance.
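
A quick way to quantify this kind of cross-region penalty is to time how long it takes to open a TCP connection to the database endpoint from each region. The following is a minimal sketch using only the Python standard library; the helper name, the endpoint, and the port in the usage comment are hypothetical, and connection time is only a rough proxy for query latency.

```python
import socket
import statistics
import time


def tcp_connect_latency_ms(host: str, port: int, samples: int = 5,
                           timeout: float = 3.0) -> float:
    """Median time in milliseconds needed to open a TCP connection to host:port."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        # Open and immediately close the connection; only the handshake is timed.
        with socket.create_connection((host, port), timeout=timeout):
            pass
        timings.append((time.perf_counter() - start) * 1000)
    return statistics.median(timings)


# Example (placeholder endpoint and port, run once from each region):
#   print(tcp_connect_latency_ms("db.example.internal", 5432))
```

Because every query pays at least one network round trip, an application that issues many small queries per request multiplies this figure, which is why keeping servers and databases in the same region matters.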

Legacy Systems

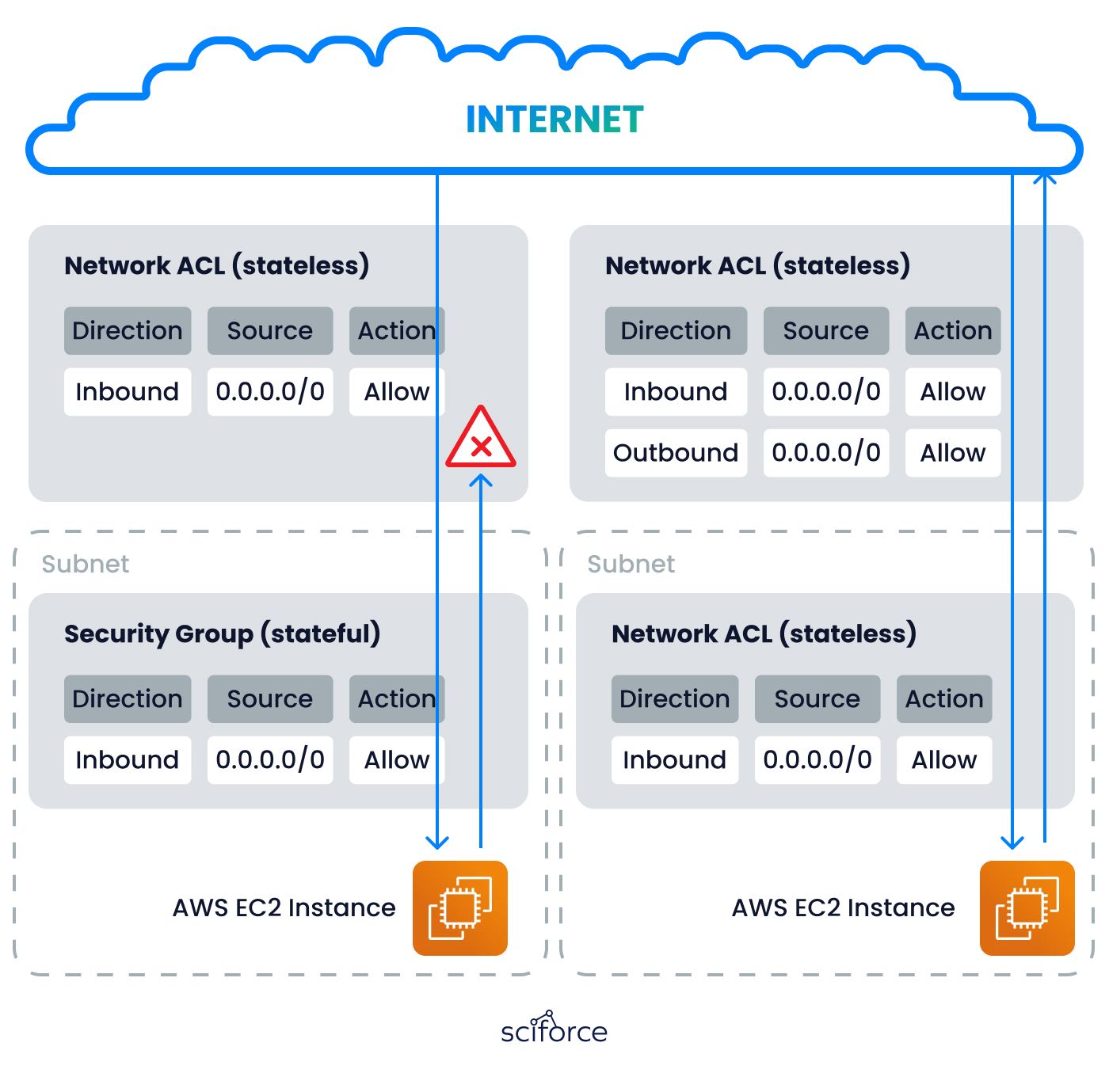

Another key challenge was that the project contained a number of legacy resources created by former employees: security groups, networks, subnets, and servers whose purpose was unknown. They nonetheless incurred costs and affected performance. Our task was to catalogue them, disable the unnecessary resources, and modernize the relevant ones.
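
As an illustration of how such an audit can start, the sketch below flags security groups that are neither attached to a network interface nor referenced by another group's rules. It is a simplified example, not the procedure used in the project: the function name is hypothetical, and the inputs are assumed to follow the shape of the EC2 `DescribeSecurityGroups` and `DescribeNetworkInterfaces` responses.

```python
def unused_security_groups(security_groups: list, network_interfaces: list) -> list:
    """Return IDs of security groups that appear to be unused.

    security_groups:    the "SecurityGroups" list from DescribeSecurityGroups
    network_interfaces: the "NetworkInterfaces" list from DescribeNetworkInterfaces
    """
    # Groups attached to at least one network interface (instances, load balancers, ...).
    attached = {
        group["GroupId"]
        for eni in network_interfaces
        for group in eni.get("Groups", [])
    }
    # Groups used as a source or destination in another group's rules.
    referenced = {
        pair["GroupId"]
        for sg in security_groups
        for perm in sg.get("IpPermissions", []) + sg.get("IpPermissionsEgress", [])
        for pair in perm.get("UserIdGroupPairs", [])
        if pair.get("GroupId") and pair["GroupId"] != sg["GroupId"]
    }
    in_use = attached | referenced
    return sorted(
        sg["GroupId"]
        for sg in security_groups
        # The default group of a VPC cannot be deleted, so it is never reported.
        if sg["GroupId"] not in in_use and sg.get("GroupName") != "default"
    )


# With boto3, the inputs could come from (pagination omitted):
#   ec2.describe_security_groups()["SecurityGroups"]
#   ec2.describe_network_interfaces()["NetworkInterfaces"]
```

A group reported here is only a candidate for removal; it still needs a manual check before being disabled.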

Terraform Automation

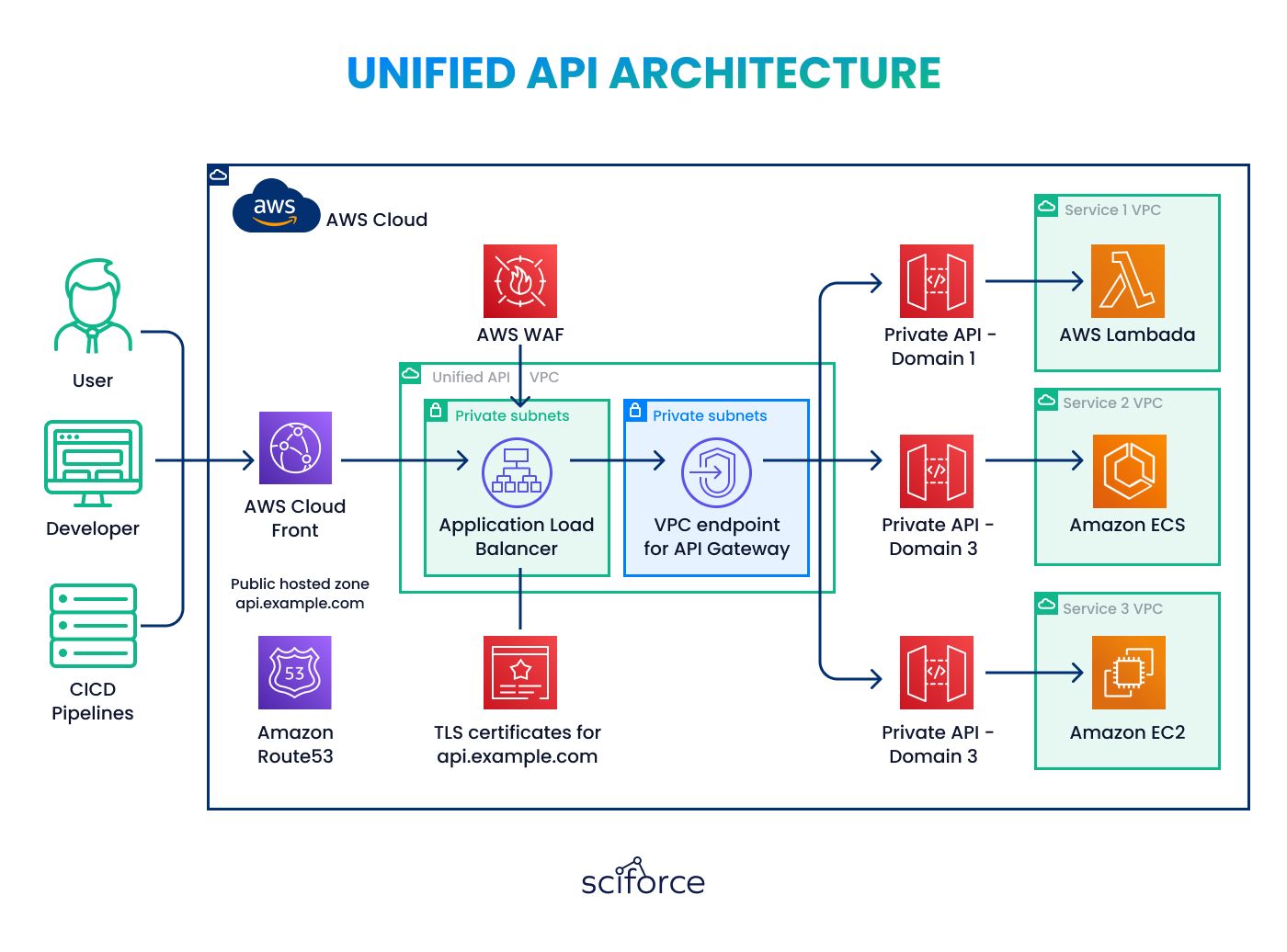

We used Terraform to automate the deployment and management of networked virtual machines, shared databases, and load balancers across the production and staging environments. This approach ensured a standardized setup and reduced the risk of human error during the migration.

Efficient Database Migration

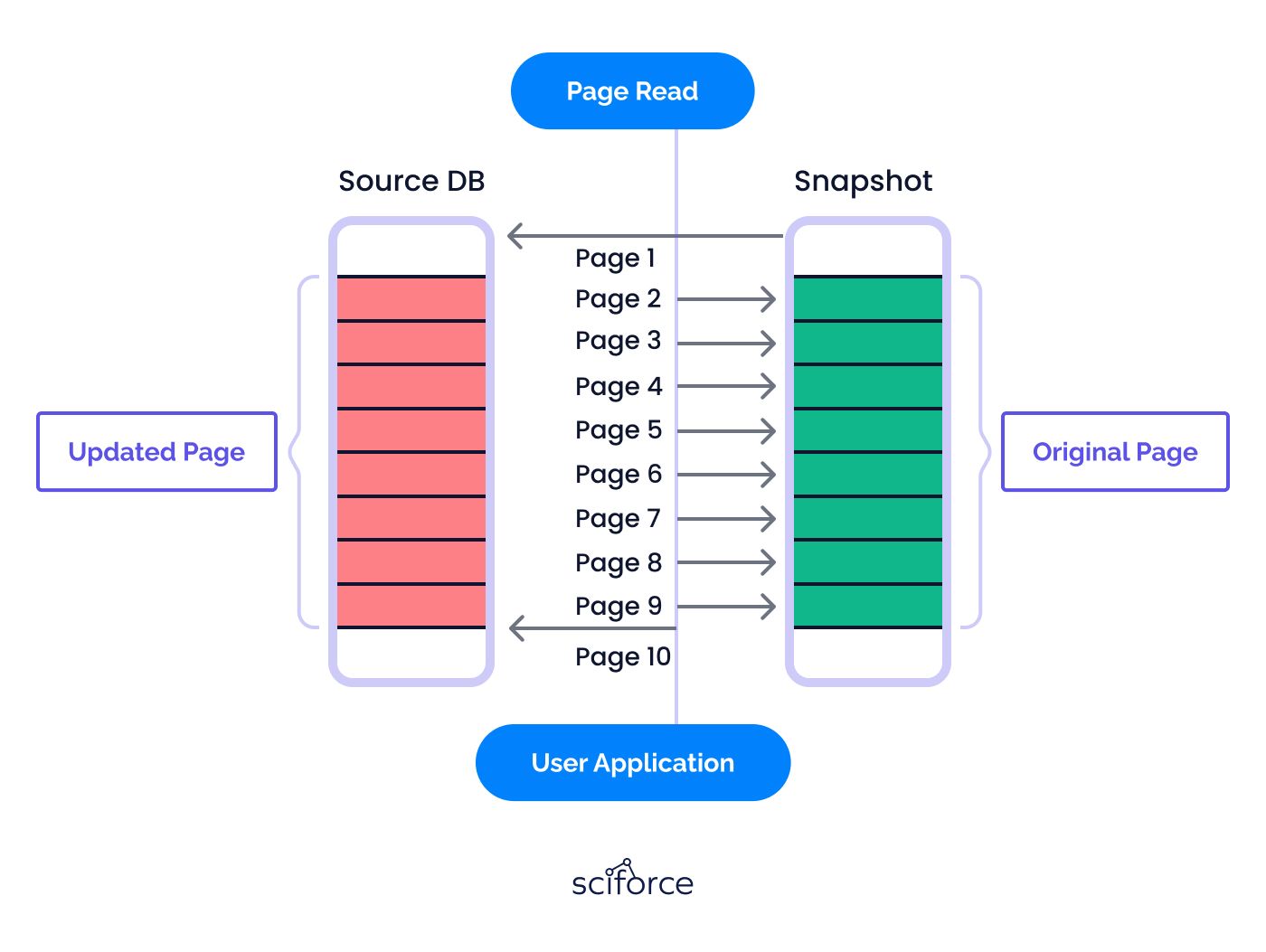

To preserve data integrity and reduce downtime, we created a snapshot of the database before transferring the data to the new region. In parallel, we ran scripts that set up the required instances and brought the snapshot into the live environment without disrupting ongoing operations.

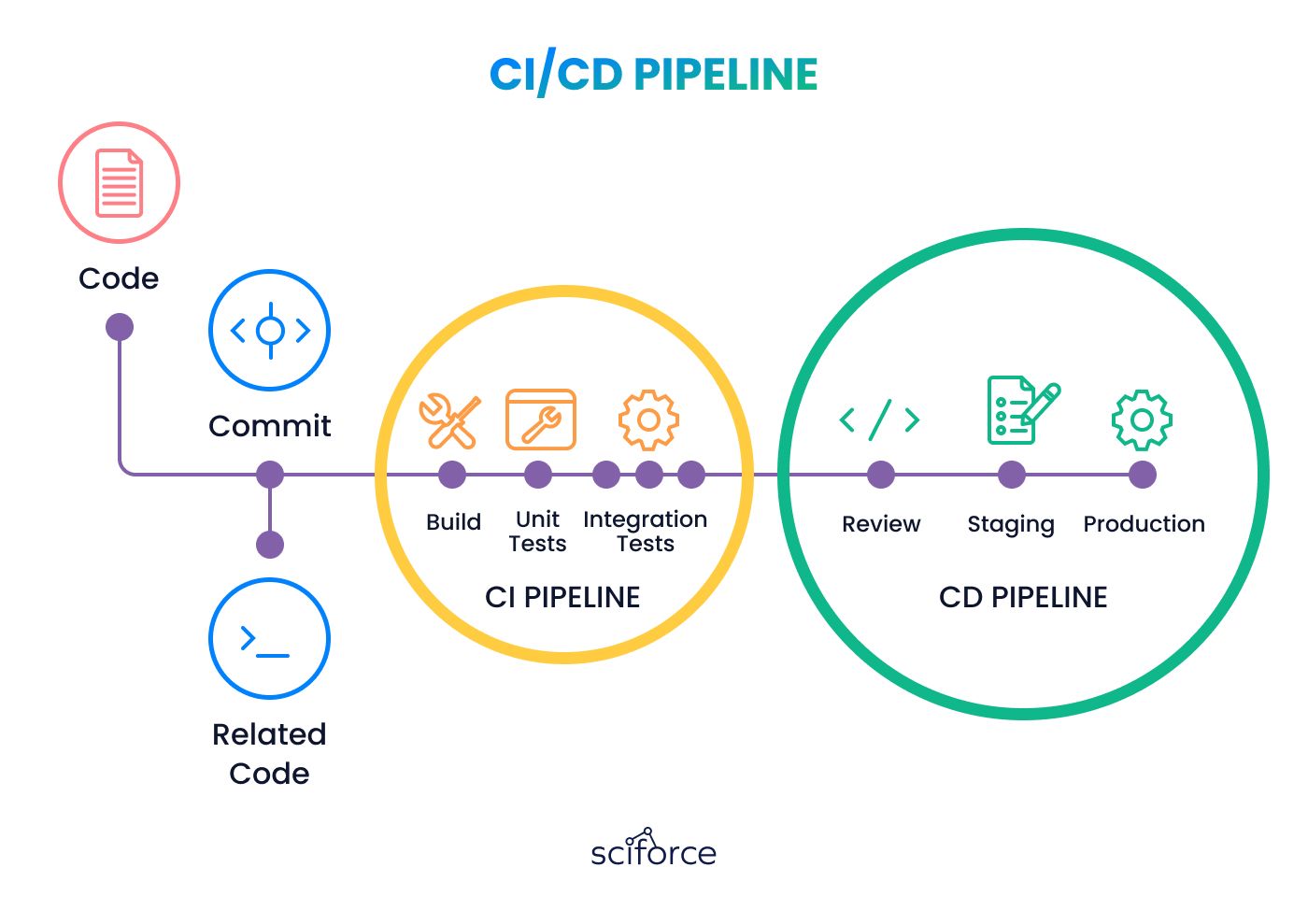

CI/CD with Semaphore

We integrated the Semaphore CI/CD platform to push updates continuously without manual intervention. Semaphore also handled the testing and deployment of backend and frontend application updates, which helped keep the system stable and reliable throughout the migration.

The development process for the AWS infrastructure migration project involved detailed planning and execution across several technical fronts:

Infrastructure Configuration and Migration

We began by configuring a network of AWS virtual machines, linked by shared services and databases, primarily managed via Terraform. The critical migration effort focused on moving a complex database system and multiple domains from Ireland to Germany, requiring precise coordination to ensure minimal operational disruption.

Database Snapshot and Transfer

A significant part of the migration involved creating a snapshot of the existing database and transferring it to the new region. This task was handled by a DevOps technician who ensured that the snapshot was successfully transferred and integrated without data loss or corruption. The process also involved setting up new database instances in the new region and ensuring they were fully synchronized with the existing data structures.
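
The snapshot copy and restore described above could be scripted roughly as follows. This is a minimal sketch, assuming the database runs on Amazon RDS and is driven through a boto3 RDS client created for the target region; the helper names, identifiers, and instance class are hypothetical and not taken from the project. The client is passed in as an argument so the logic can be exercised without AWS access.

```python
from typing import Optional


def copy_snapshot_to_region(target_rds, source_snapshot_arn: str, source_region: str,
                            target_snapshot_id: str,
                            kms_key_id: Optional[str] = None) -> str:
    """Copy an RDS snapshot into the region of `target_rds` and wait until it is usable."""
    params = {
        # Cross-region copies must reference the source snapshot by ARN.
        "SourceDBSnapshotIdentifier": source_snapshot_arn,
        "TargetDBSnapshotIdentifier": target_snapshot_id,
        "SourceRegion": source_region,
    }
    if kms_key_id:
        # Encrypted snapshots need a KMS key that exists in the target region.
        params["KmsKeyId"] = kms_key_id
    target_rds.copy_db_snapshot(**params)
    target_rds.get_waiter("db_snapshot_available").wait(
        DBSnapshotIdentifier=target_snapshot_id
    )
    return target_snapshot_id


def restore_instance(target_rds, snapshot_id: str, instance_id: str,
                     instance_class: str) -> str:
    """Create a new database instance from the copied snapshot and wait for it."""
    target_rds.restore_db_instance_from_db_snapshot(
        DBInstanceIdentifier=instance_id,
        DBSnapshotIdentifier=snapshot_id,
        DBInstanceClass=instance_class,
    )
    target_rds.get_waiter("db_instance_available").wait(
        DBInstanceIdentifier=instance_id
    )
    return instance_id


# Example wiring (needs boto3 and AWS credentials; all identifiers are placeholders):
#   import boto3
#   rds_frankfurt = boto3.client("rds", region_name="eu-central-1")
#   copy_snapshot_to_region(
#       rds_frankfurt,
#       "arn:aws:rds:eu-west-1:123456789012:snapshot:pre-migration",
#       "eu-west-1",
#       "pre-migration-copy",
#   )
#   restore_instance(rds_frankfurt, "pre-migration-copy", "app-db", "db.t3.medium")
```

Writes that reach the old database after the snapshot is taken are not part of the copy, so a script like this has to be paired with a write freeze or a follow-up synchronization step.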

Code Adaptation and Deployment

Our development team undertook significant coding work to adapt both the backend (Ruby) and frontend (JavaScript) components to the new region and the updated AWS configuration. One major challenge was significant latency during the initial trials, in which a newly created AWS instance in the new region was connected to the old database. We ran this setup overnight as a temporary measure, but the delays proved unsustainable, which led us to adjust the strategy.

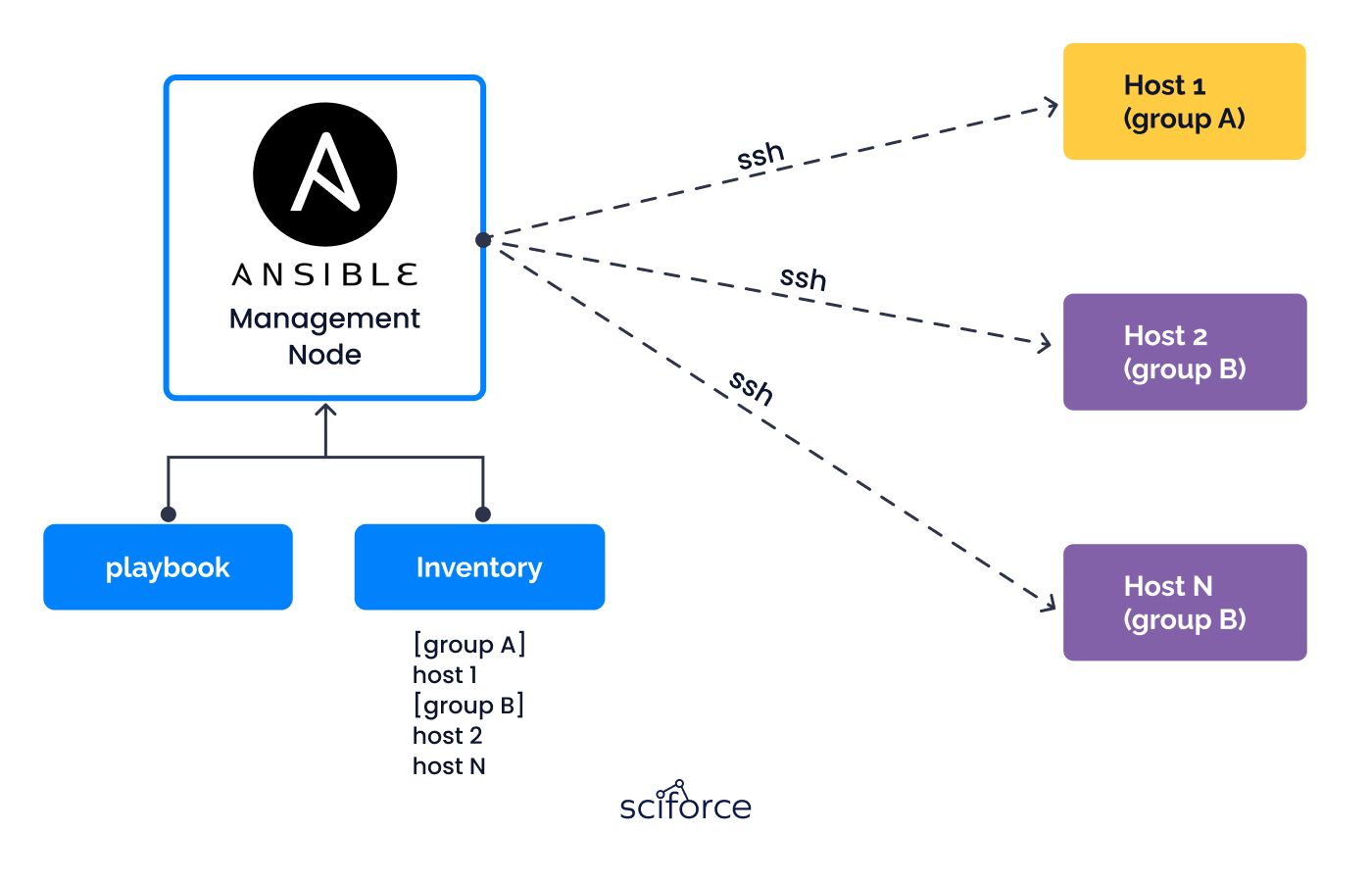

Ansible Automation for Virtual Machine Setup

Automation played a pivotal role, with Ansible used to streamline the setup and configuration of new virtual machines. Specific tasks included installing essential applications, deploying code agents, and configuring web servers like Nginx. The production setup expanded from four to five virtual machines, managed through Ansible playbooks that automated the installation and configuration processes for various service groups.

Continuous Integration and Continuous Deployment (CI/CD)

We implemented the Semaphore CI/CD system to manage ongoing updates and feature deployments seamlessly. This system was crucial for integrating new code updates into the production environment automatically, facilitating a smooth workflow that minimized manual interventions and errors.

AWS Services

The backbone of the infrastructure was Amazon Web Services (AWS), which provided the necessary cloud resources including EC2 for virtual servers, RDS for managed database services, and Route 53 for DNS management.

Terraform

We leveraged Terraform for infrastructure as code (IaC) to ensure consistent and reproducible setups across environments. Terraform was crucial for defining and deploying the entire cloud infrastructure, including network configurations, virtual machines, and database instances. This tool also allowed for version control of our infrastructure changes, enhancing the maintainability and traceability of the deployment process.

Ruby and JavaScript

The application stack consisted of a Ruby on Rails backend and a React-based frontend. Ruby on Rails was chosen for its robustness and mature ecosystem, making it ideal for building reliable and secure backend services. React’s flexibility and component-based architecture allowed for an interactive and dynamic user interface on the frontend.

Ansible

For configuration management, Ansible was used to automate the setup of virtual machines, ensuring that all servers were configured consistently and according to best practices. Ansible playbooks automated tasks such as software installation, system configuration, and service deployment, significantly reducing manual overhead and the potential for human error.

Semaphore CI/CD

We implemented Semaphore as our continuous integration and deployment tool to automate the testing and deployment phases of our development cycle. Semaphore is a lesser-known but effective platform for managing build pipelines, and it ensured that new code revisions were tested before being deployed to production environments.

Monitoring and Security Tools

We set up thorough monitoring systems to keep an eye on the infrastructure's health and efficiency. Using tools like AWS CloudWatch and tailored logging methods, we gained immediate insights into how well the applications and systems were running. For security, we enhanced protection with AWS security groups, network access control lists (NACLs), and identity and access management (IAM) policies, ensuring all resource access was securely managed and reviewed.

Operational Continuity

During the migration, we kept the application running smoothly without noticeable downtime. We did this by transferring data in stages, starting with non-critical data and gradually including more essential parts. Performance comparisons before and after the migration showed system latency dropping from 120 ms to 75 ms, along with faster processing.
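
To put the latency figures above in relative terms, the change can be expressed as a percentage of the original value. The helper below is a small illustrative calculation; its name is hypothetical.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative decrease from `before` to `after`, in percent."""
    return (before - after) / before * 100


# Latency before and after the migration, in milliseconds.
print(percent_reduction(120, 75))  # 37.5
```

In other words, moving from 120 ms to 75 ms is a 37.5% reduction in latency.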

Data Integrity and Security

We successfully transferred all data without any loss. We created a complete snapshot of the database and moved it to the new region safely. Adjustments were made to the infrastructure to accept the new data securely, upholding Germany's strict security standards.

System Performance and Reliability

After the migration, system performance improved thanks to better resource allocation and lower latency, since the servers and databases were now closer to the primary user base in Germany. We also removed outdated and unnecessary resources, which led to 40% faster data retrieval and 30% quicker server responses.

Cost Optimization

By optimizing server usage and automating processes, we decreased manual management and operational expenses. The migration reduced the total cost of ownership (TCO) by approximately 20%. This decrease was achieved through the elimination of outdated systems, more efficient resource allocation, and automation of infrastructure management.