Data Fragmentation & Integration

Companies stored key metrics like recruitment rates, financial data, and operational KPIs in separate systems, making reporting inconsistent and time-consuming. The product solves this by gathering data from HR tools, CRMs, financial records, and internal databases, ensuring all information is accurate, consistent, and accessible in one place.

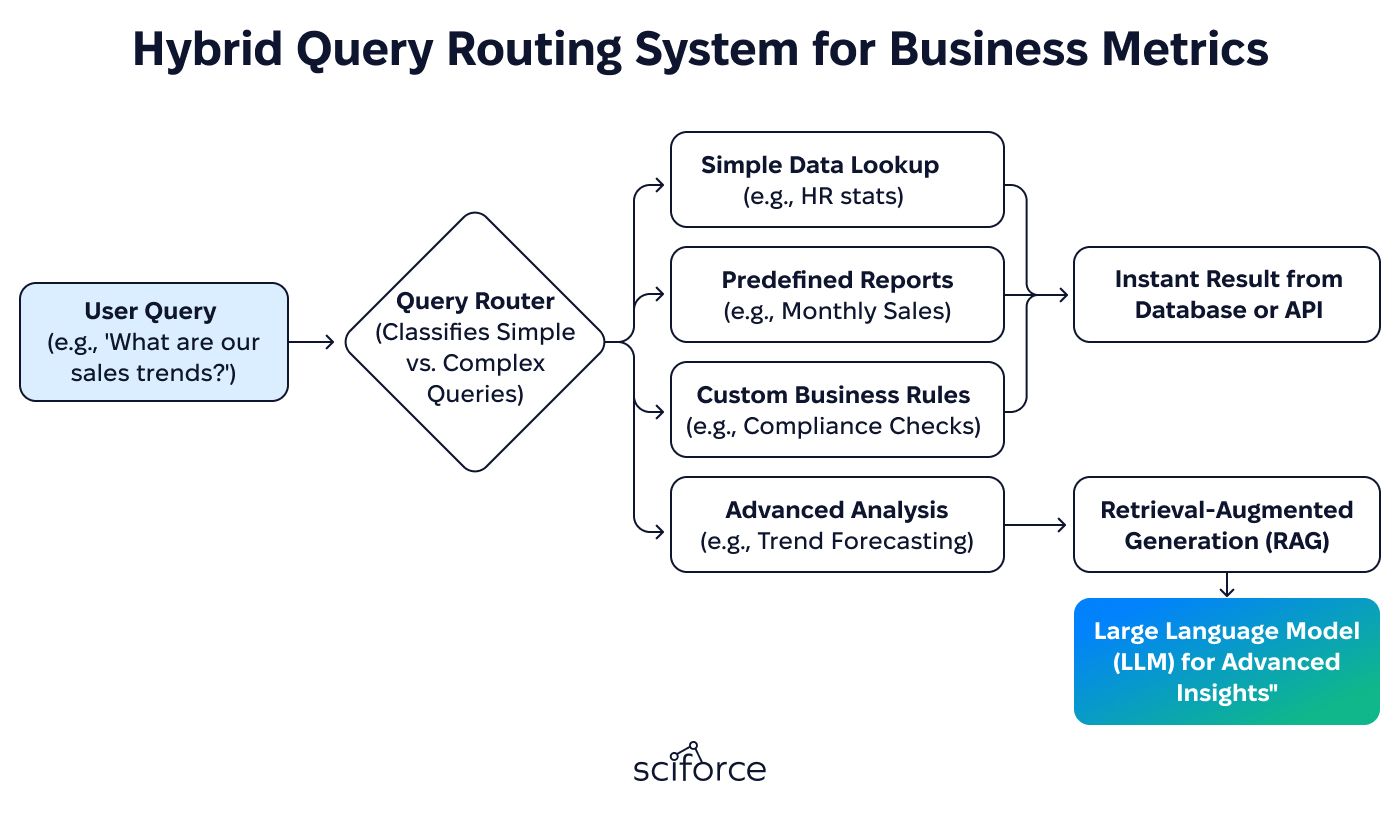

Response Time Optimization

Frequent LLM usage led to slow response times and high costs, especially for simple queries. The challenge was to reduce latency without losing functionality. The solution involved routing basic requests (e.g., retrieving employee stats or sales data) through faster search methods, while reserving the LLM for complex tasks like summarizing reports or analyzing trends.
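A minimal sketch of such a router follows. It assumes a simple keyword-and-pattern classifier. The patterns, `is_simple`, `route`, and the `lookup`/`llm` callables are hypothetical placeholders, not the product's actual routing logic.

```python
import re
from typing import Callable, Tuple

# Hypothetical rules: words that signal analysis or summarization go to the LLM.
COMPLEX_KEYWORDS = ("summarize", "summary", "trend", "why", "forecast", "compare")
# Hypothetical patterns for direct metric lookups.
SIMPLE_PATTERNS = [
    re.compile(r"\b(how many|count of|number of)\b", re.IGNORECASE),
    re.compile(r"\b(what is|show|get)\b.*\b(headcount|sales|revenue|turnover)\b", re.IGNORECASE),
]


def is_simple(query: str) -> bool:
    """True if the query looks like a direct metric lookup."""
    lowered = query.lower()
    if any(word in lowered for word in COMPLEX_KEYWORDS):
        return False
    return any(pattern.search(query) for pattern in SIMPLE_PATTERNS)


def route(query: str,
          lookup: Callable[[str], str],
          llm: Callable[[str], str]) -> Tuple[str, str]:
    """Dispatch to the fast lookup path or the LLM; return (path, answer)."""
    if is_simple(query):
        return "lookup", lookup(query)
    return "llm", llm(query)
```

Queries that match no rule fall through to the LLM. This design trades some cost for coverage: an unfamiliar question still gets answered, just more slowly.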

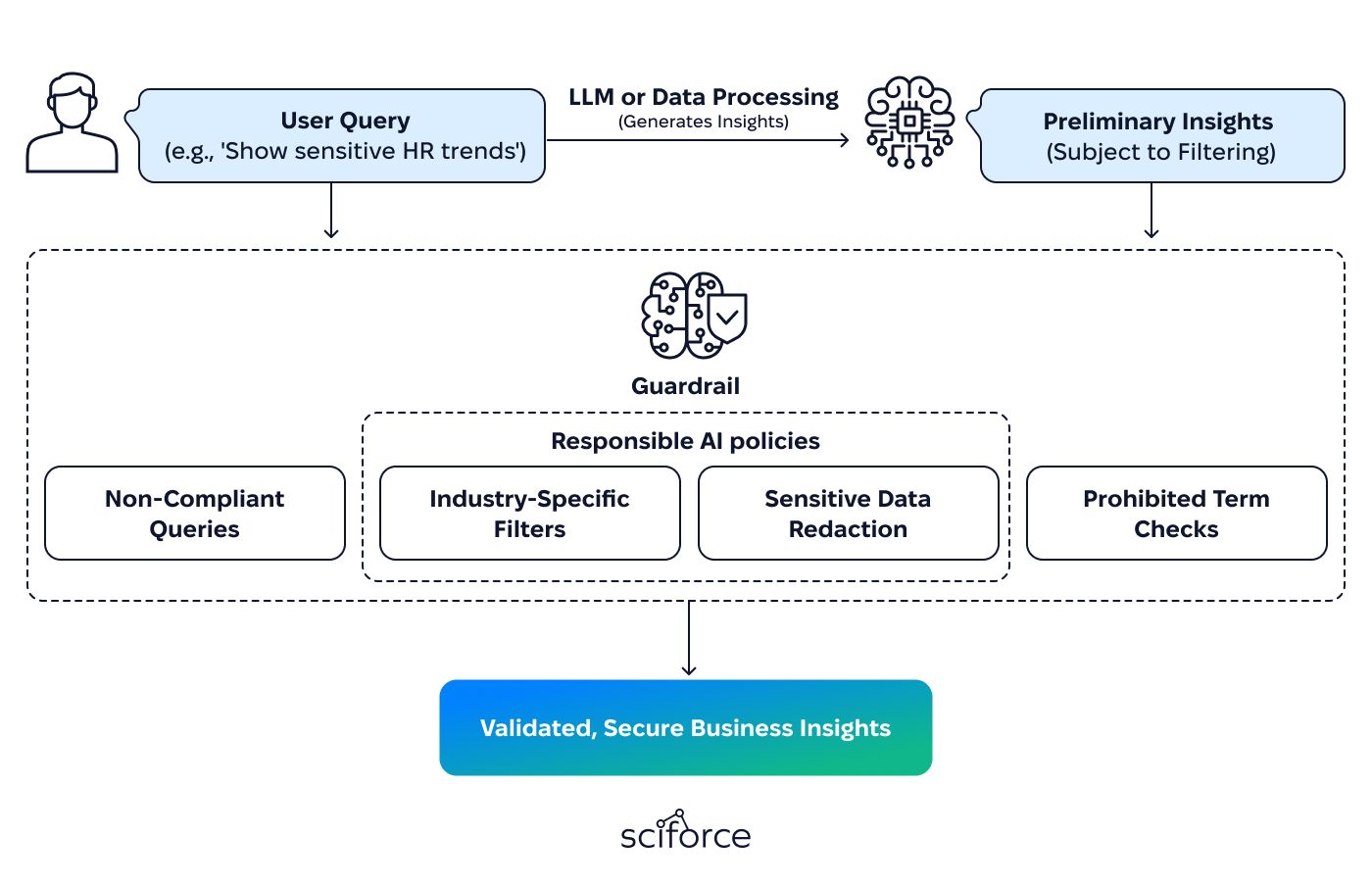

Ensuring Response Accuracy & Relevance

The LLM sometimes produced incorrect or misleading answers, which could affect business decisions. The challenge was to prevent errors and ensure responses matched each client’s industry and data. To solve this, we added query filters to remove irrelevant questions, validation checks to verify responses, and customization to keep answers industry-specific.
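One possible validation check is sketched below. It assumes that an answer should only quote figures present in the data retrieved for the query. The names `unsupported_numbers` and `NUMBER_RE` are hypothetical.

```python
import re
from typing import Dict, List, Set

# Numbers not glued to letters (so "Q3" is ignored); thousands separators are
# stripped first. Scale suffixes such as "1.2M" are NOT handled in this sketch.
NUMBER_RE = re.compile(r"(?<![\w.])\d+(?:\.\d+)?(?!\w)")


def extract_numbers(text: str) -> Set[float]:
    return {float(n) for n in NUMBER_RE.findall(text.replace(",", ""))}


def unsupported_numbers(answer: str, source: Dict[str, float]) -> List[float]:
    """Numbers in the LLM answer that do not appear in the retrieved source data.

    An empty list means the check passed; otherwise the answer can be
    regenerated or replaced with a fallback message.
    """
    allowed = {float(v) for v in source.values()}
    return sorted(n for n in extract_numbers(answer) if n not in allowed)
```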

Implementing Guardrails & Security Filters

The LLM needed safeguards to prevent data leaks, irrelevant answers, or inappropriate content. The challenge was to control responses without limiting usability. To solve this, we built guardrails that filter queries and responses based on client rules, block unauthorized data access, and enforce security policies.
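The sketch below shows per-client guardrails that screen the incoming query and redact the outgoing response. The policy fields (`blocked_topics`, `allowed_datasets`, `redact_patterns`) are hypothetical, chosen for illustration rather than taken from the product.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class ClientPolicy:
    """Hypothetical per-client guardrail rules."""
    blocked_topics: Set[str] = field(default_factory=set)
    allowed_datasets: Set[str] = field(default_factory=set)
    redact_patterns: List[str] = field(default_factory=list)


def check_query(query: str, datasets: Set[str], policy: ClientPolicy) -> Optional[str]:
    """Return a refusal reason, or None if the query may proceed."""
    lowered = query.lower()
    for topic in sorted(policy.blocked_topics):
        if topic in lowered:
            return f"topic '{topic}' is blocked by client policy"
    denied = datasets - policy.allowed_datasets
    if denied:
        return "no access to: " + ", ".join(sorted(denied))
    return None


def filter_response(text: str, policy: ClientPolicy) -> str:
    """Redact anything matching the client's patterns before it reaches the user."""
    for pattern in policy.redact_patterns:
        text = re.sub(pattern, "[REDACTED]", text)
    return text
```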

Handling Unstructured Data Efficiently

Clients needed a way to extract useful information from PDFs, images, and web pages, but using LLMs for everything was too slow and expensive. The challenge was to process data efficiently without unnecessary AI costs. To solve this, we used traditional parsers for well-structured content and applied LLMs only when data was messy, ambiguous, or needed deeper understanding.
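A minimal "parser first, LLM as fallback" sketch follows. It assumes text has already been pulled out of the PDF, image, or web page upstream (e.g. by a PDF text extractor or OCR). The `llm_extract` callable and the two parsers shown (JSON and "Key: value" lines) are hypothetical stand-ins.

```python
import json
import re
from typing import Any, Callable, Dict, Optional, Tuple

KV_LINE = re.compile(r"^\s*([A-Za-z][\w ]*?)\s*:\s*(.+?)\s*$")


def parse_structured(text: str) -> Optional[Dict[str, Any]]:
    """Try cheap deterministic parsers; return None if the text is not well structured."""
    try:
        data = json.loads(text)
        if isinstance(data, dict):
            return data
    except json.JSONDecodeError:
        pass
    lines = [line for line in text.splitlines() if line.strip()]
    matches = [KV_LINE.match(line) for line in lines]
    if lines and all(matches):  # every non-empty line must be "Key: value"
        return {m.group(1).strip().lower(): m.group(2) for m in matches}
    return None


def extract(text: str,
            llm_extract: Callable[[str], Dict[str, Any]]) -> Tuple[str, Dict[str, Any]]:
    """Use the parser when it succeeds; fall back to the (costlier) LLM otherwise."""
    parsed = parse_structured(text)
    if parsed is not None:
        return "parser", parsed
    return "llm", llm_extract(text)
```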

Optimizing AI Resource Usage

Using LLMs too often put a strain on system resources and made scaling expensive. The challenge was to use AI efficiently while keeping performance high. To solve this, we handled simple queries with faster, rule-based methods and used LLMs only for complex tasks like summarization and predictive insights. This reduced resource usage and made the system more scalable.

Model Evaluation & Performance Benchmarking

Finding the right balance between LLM accuracy, speed, and cost was a challenge. Using AI too often made the system expensive, while limiting it could reduce response quality. To solve this, we tested different LLM setups and hybrid models, measuring accuracy, response time, and cost to determine the most efficient approach.
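The sketch below shows one way to turn such measurements into a decision. It picks the cheapest setup that meets a latency ceiling and an accuracy floor. The metric names, thresholds, and selection rule are assumptions; the write-up does not say how setups were scored.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BenchmarkResult:
    name: str             # model or hybrid setup being evaluated
    accuracy: float       # share of evaluation questions answered correctly (0..1)
    p95_latency_s: float  # 95th-percentile response time, seconds
    cost_per_1k: float    # cost per 1,000 queries, any consistent currency


def pick_config(results: List[BenchmarkResult],
                max_latency_s: float,
                min_accuracy: float) -> Optional[BenchmarkResult]:
    """Cheapest setup that meets both thresholds; ties go to the more accurate one."""
    eligible = [r for r in results
                if r.p95_latency_s <= max_latency_s and r.accuracy >= min_accuracy]
    if not eligible:
        return None
    return min(eligible, key=lambda r: (r.cost_per_1k, -r.accuracy))
```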

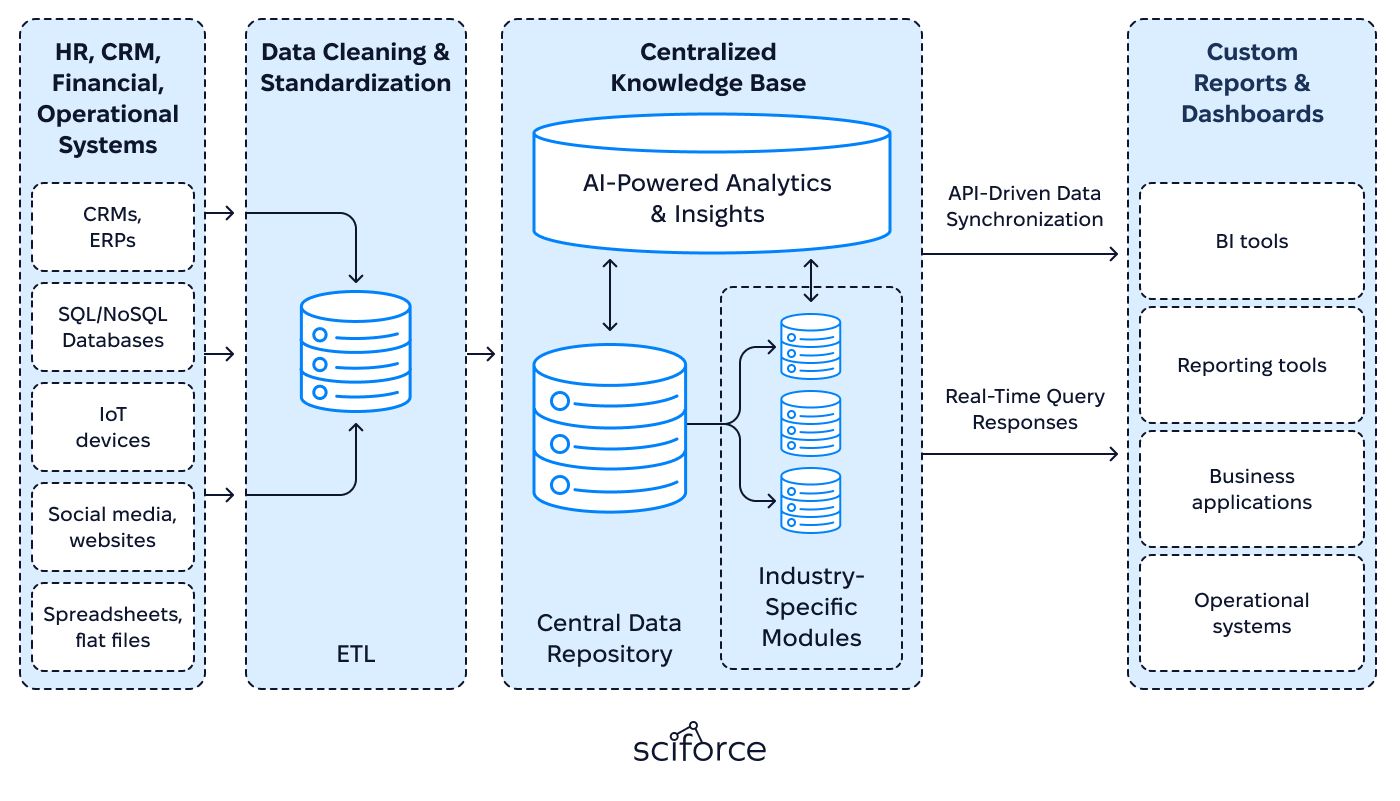

Data Integration & Standardization

The system collects, cleans, and standardizes data from HR, financial, CRM, and operational systems, ensuring accuracy and consistency. It removes duplicates, resolves inconsistencies, and unifies formats, creating a single, reliable source of truth for reporting and analytics.
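A minimal sketch of the standardize-then-deduplicate step follows. It maps source-specific field names onto one shared schema, normalizes formats, and deduplicates on email. The field maps, date formats, and the "first source wins" conflict rule are hypothetical choices.

```python
from datetime import datetime
from typing import Dict, Iterable, List, Tuple

# Hypothetical mappings from source-specific field names to one shared schema.
FIELD_MAP: Dict[str, Dict[str, str]] = {
    "hr": {"emp_email": "email", "full_name": "name", "hired": "hire_date"},
    "crm": {"Email": "email", "Name": "name", "HireDate": "hire_date"},
}
# Accepted input date formats, tried in order. Ambiguous formats (01/04 as
# day/month vs month/day) should really be configured per source.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def to_iso_date(value: str) -> str:
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def standardize(source: str, record: dict) -> dict:
    """Rename fields and normalize formats (email case, name spacing, ISO dates)."""
    mapping = FIELD_MAP[source]
    out = {mapping[k]: v for k, v in record.items() if k in mapping}
    out["email"] = out["email"].strip().lower()
    out["name"] = " ".join(out["name"].split()).title()
    out["hire_date"] = to_iso_date(out["hire_date"])
    return out


def unify(records: Iterable[Tuple[str, dict]]) -> List[dict]:
    """Standardize and deduplicate on email; the first source seen wins conflicts."""
    unified: Dict[str, dict] = {}
    for source, raw in records:
        rec = standardize(source, raw)
        unified.setdefault(rec["email"], rec)
    return list(unified.values())
```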

Scalable & Adaptive Framework

The system adapts to different industries through configurable metrics, workflows, and reports while maintaining a standardized data structure. Its modular design enables seamless integration and compliance without costly changes.
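The sketch below shows configuration-driven metrics under one assumption: metrics are defined once over the standardized schema, and each industry's report is just a list of metric names. The metric names and industry lists are hypothetical.

```python
from typing import Callable, Dict, List

Rows = List[dict]  # records already mapped to the shared schema

# Hypothetical metric library, defined once over the standardized schema.
METRICS: Dict[str, Callable[[Rows], float]] = {
    "headcount": lambda rows: float(len(rows)),
    "avg_tenure_years": lambda rows: (
        sum(r["tenure_years"] for r in rows) / len(rows) if rows else 0.0),
    "billable_ratio": lambda rows: (
        sum(1 for r in rows if r.get("billable")) / len(rows) if rows else 0.0),
}

# Hypothetical per-industry configuration: which metrics each report shows.
INDUSTRY_REPORTS: Dict[str, List[str]] = {
    "consulting": ["headcount", "billable_ratio"],
    "retail": ["headcount", "avg_tenure_years"],
}


def build_report(industry: str, rows: Rows) -> Dict[str, float]:
    """Compute the configured metrics; supporting a new industry is a config change."""
    return {name: METRICS[name](rows) for name in INDUSTRY_REPORTS[industry]}
```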

Hybrid Processing for Efficiency

The system balances speed and cost by using traditional search methods for simple queries and AI for complex tasks. Routine data lookups run on fast, rule-based processes, while LLMs handle advanced analysis like summarization and trend forecasting.

Secure & Compliant Architecture

The system safeguards data with access controls, encryption, and compliance measures. Role-based permissions restrict access, while audit logs track activity for security monitoring. It follows industry regulations and standards (e.g., GDPR, HIPAA, SOC 2) and includes automated compliance checks to ensure data protection.
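A minimal sketch of role-based permission checks that write an audit record for every decision follows. The roles, permission strings, and the in-memory `AUDIT_LOG` list are hypothetical; a real system would write to a durable, append-only store.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping; "*" grants everything.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"sales:read", "hr_aggregate:read"},
    "hr_manager": {"hr_aggregate:read", "hr_individual:read"},
    "admin": {"*"},
}

# In-memory stand-in for an append-only audit store.
AUDIT_LOG: List[dict] = []


def authorize(user: str, role: str, permission: str) -> bool:
    """Check a permission for a role (unknown roles get nothing) and log the decision."""
    granted = ROLE_PERMISSIONS.get(role, set())
    allowed = "*" in granted or permission in granted
    AUDIT_LOG.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed
```

Denied requests are logged as well as granted ones, since refused access attempts are often the most useful signal for security monitoring.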

Modular & API-Driven Design

Built on a microservices-based, API-first architecture, the system ensures scalability and seamless integration with existing tools. Its modular structure allows businesses to adapt features, connect with third-party systems, and scale efficiently without major redevelopment.

Flexible Deployment Options

The system can be deployed in the cloud or on-premises, depending on business needs. Cloud deployment offers scalability and easier maintenance, while on-premises deployment provides greater control over data and security, ensuring compliance with IT and regulatory requirements.

Centralized Data Management & Customization

The system aggregates and standardizes company-wide performance metrics across departments, ensuring consistent reporting and analysis. It adapts to various industries through configurable settings, allowing businesses to tailor features without full custom development. A modular, scalable architecture lets companies activate only the modules they need.
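Per-client module activation could look roughly like the sketch below: a small registry in which each client enables only the modules it needs. The decorator, module names, and `build_dashboard` are hypothetical.

```python
from typing import Callable, Dict, Iterable

# Hypothetical module registry: each module builds one section of a dashboard.
MODULES: Dict[str, Callable[[dict], dict]] = {}


def module(name: str):
    """Decorator that registers a dashboard module under `name`."""
    def register(fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        MODULES[name] = fn
        return fn
    return register


@module("recruitment")
def recruitment(data: dict) -> dict:
    return {"open_roles": len(data.get("open_roles", []))}


@module("finance")
def finance(data: dict) -> dict:
    return {"revenue": sum(data.get("invoices", []))}


def build_dashboard(enabled: Iterable[str], data: dict) -> dict:
    """Run only the modules a client has switched on; reject unknown names."""
    enabled = list(enabled)
    unknown = set(enabled) - set(MODULES)
    if unknown:
        raise ValueError(f"unknown modules: {sorted(unknown)}")
    return {name: MODULES[name](data) for name in enabled}
```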

AI-Powered Insights & LLM Chatbot for Data Retrieval

The AI-driven chatbot lets users query business metrics in natural language, eliminating the need for manual navigation. Predictive analytics forecasts trends from historical data, while Retrieval-Augmented Generation (RAG) grounds responses in the client’s own data. Answers can be delivered as summaries, detailed reports, or visual charts.
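A toy sketch of the RAG flow follows: retrieve the most relevant chunks, then build a prompt that restricts the LLM to that context and requests an output format. The bag-of-words `embed` is a stand-in for a real embedding model. All function names and the prompt wording are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, chunks: List[str], fmt: str = "summary") -> str:
    """Ground the LLM in retrieved data and ask for the requested output format."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Format: {fmt}\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )
```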

Guardrails & Hybrid Query Processing for Cost Efficiency

The system includes security guardrails that filter queries and responses to prevent data leaks and non-compliant outputs. Role-based access ensures users only retrieve authorized data, while hybrid query routing optimizes performance by handling simple lookups with fast search methods and reserving LLM processing for complex analytical tasks, reducing operational costs.

Structured Knowledge Base & System Integrations

Each client has a dedicated knowledge base that stores data in vectorized form, eliminating the need for LLM retraining while maintaining data isolation and security. The system integrates with HR platforms, CRMs, financial tools, and operational databases, using API-based access to synchronize business intelligence insights in real time.
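The sketch below illustrates per-client isolation: each client gets its own index, and a search only ever reads the caller's index. Adding documents appends vectors and never retrains a model. `TenantVectorStore` is a hypothetical in-memory stand-in for a real vector database.

```python
import math
from typing import Dict, List, Sequence, Tuple


class TenantVectorStore:
    """In-memory stand-in for per-client vector indexes (hypothetical API)."""

    def __init__(self) -> None:
        self._indexes: Dict[str, List[Tuple[Sequence[float], str]]] = {}

    def add(self, client_id: str, vector: Sequence[float], text: str) -> None:
        # New documents are appended to the client's own index; no model retraining.
        self._indexes.setdefault(client_id, []).append((vector, text))

    def search(self, client_id: str, query: Sequence[float], k: int = 3) -> List[str]:
        # Only the calling client's index is ever searched.
        index = self._indexes.get(client_id, [])

        def similarity(vec: Sequence[float]) -> float:
            dot = sum(x * y for x, y in zip(vec, query))
            norm = math.hypot(*vec) * math.hypot(*query)
            return dot / norm if norm else 0.0

        ranked = sorted(index, key=lambda entry: similarity(entry[0]), reverse=True)
        return [text for _, text in ranked[:k]]
```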

Error Handling & Unstructured Data Processing

An intelligent error-handling system converts raw API errors into user-friendly messages with actionable, context-specific troubleshooting steps. For unstructured data, a combination of LLMs and traditional parsers extracts insights from PDFs, images, and web pages, keeping document processing efficient by balancing AI usage with cost-effective alternatives.
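A minimal sketch of translating raw integration errors into user-facing messages follows. It assumes errors arrive with an HTTP status code. The message catalogue, the `ApiError` type, and the "Settings" wording are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class ApiError:
    status: int       # HTTP status code returned by the integration
    service: str      # e.g. "CRM" or "HR"
    detail: str = ""  # raw message; keep it for logs, not for end users


# Hypothetical catalogue: (what happened, what the user can do about it).
MESSAGES: Dict[int, Tuple[str, str]] = {
    401: ("Your connection to {service} has expired.",
          "Reconnect the {service} integration in Settings."),
    403: ("You don't have permission to view this {service} data.",
          "Ask an administrator to grant access."),
    404: ("The requested {service} record could not be found.",
          "Check the name or ID and try again."),
    429: ("{service} is receiving too many requests right now.",
          "Wait a minute and retry."),
}
FALLBACK = ("Something went wrong while contacting {service}.",
            "Try again later; if the problem persists, contact support.")


def to_user_message(err: ApiError) -> str:
    """Map a raw error to a plain-language problem plus a suggested action."""
    problem, action = MESSAGES.get(err.status, FALLBACK)
    return f"{problem} {action}".format(service=err.service)
```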

Initial State – Legacy System & Limitations

The original system was built on the Rasa chatbot framework and required a separate machine learning model for each client, which made it resource-intensive, slow, and difficult to scale. Performance was inconsistent, and data retrieval was inefficient, especially when handling large datasets across multiple sources. Customizing the system for different industries was manual and costly, limiting flexibility.

Redesign & Optimization

To improve efficiency, we eliminated the need for individual ML model training, instead implementing vector search, similarity-based retrieval, and classical algorithms for simple queries. LLM resources were reserved for complex tasks, such as trend analysis and contextual summaries, reducing operational costs. Query routing logic was optimized to ensure that only relevant requests reached the LLM, while direct lookups handled structured data instantly.
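The next sketch shows how similarity-based matching can replace a per-client trained intent classifier. Each intent is described by a few example utterances, and a query is matched to the most similar example. Token-overlap (Jaccard) similarity stands in for vector search here. The intents, examples, and threshold are hypothetical. Queries below the threshold return `None`, meaning they would go to the LLM.

```python
import re
from typing import Dict, List, Optional, Set

# Hypothetical example utterances; onboarding a client means adding examples,
# not training a model.
INTENT_EXAMPLES: Dict[str, List[str]] = {
    "get_headcount": ["how many employees do we have", "current headcount", "number of staff"],
    "get_sales": ["total sales this month", "show revenue for last quarter", "sales figures"],
}


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def match_intent(query: str, threshold: float = 0.3) -> Optional[str]:
    """Best-matching intent, or None if nothing is similar enough (send to LLM)."""
    q = tokens(query)
    best, best_score = None, 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            score = jaccard(q, tokens(example))
            if score > best_score:
                best, best_score = intent, score
    return best if best_score >= threshold else None
```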

Integration with Other Services

We connected the chatbot system with multiple company sub-products, including HR platforms, CRMs, financial databases, and operational systems, creating a centralized knowledge base for real-time insights. An API-based architecture enabled seamless data exchange, improving efficiency and ensuring compatibility with external enterprise tools.

Prototyping & Testing

We developed a prototype using various LLMs, evaluating both API-based and locally deployed options. API-based models included GPT-4, GPT-4o, and GPT-4o-mini; GPT-4o-mini was ultimately selected for its balance of performance and cost. For locally deployed models, we tested Dolly 2, Falcon, LLaMA 2 (Auto-GPTQ), Mistral, and Mixtral.

Benchmarking focused on three key criteria: speed, cost-efficiency, and text generation quality.

A/B testing was conducted to compare query processing methods, ensuring an optimal balance between speed, cost-efficiency, and text generation quality. The selected models were fine-tuned to optimize performance without unnecessary computational overhead.
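The write-up does not describe the A/B mechanics. One common setup is sketched below: hash-based bucketing so each user consistently sees one query-processing variant, plus per-variant averages of latency, cost, and quality. The event shape and function names are hypothetical. A real analysis would also check statistical significance before picking a winner.

```python
import hashlib
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Sequence, Tuple


def assign_variant(user_id: str, experiment: str, variants: Sequence[str] = ("A", "B")) -> str:
    """Deterministic bucketing: a user always sees the same variant of an experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def summarize(events: Iterable[Tuple[str, float, float, float]]) -> Dict[str, Dict[str, float]]:
    """events: (variant, latency_s, cost, quality_score) per query; returns per-variant means."""
    grouped: Dict[str, List[Tuple[float, float, float]]] = defaultdict(list)
    for variant, latency, cost, quality in events:
        grouped[variant].append((latency, cost, quality))
    return {
        variant: {
            "latency_s": mean(r[0] for r in rows),
            "cost": mean(r[1] for r in rows),
            "quality": mean(r[2] for r in rows),
        }
        for variant, rows in grouped.items()
    }
```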

Implementing Guardrails & Security Layers

To ensure compliance and prevent misinformation, we introduced query moderation, response validation, and security guardrails. Access controls, anomaly detection, and predefined client policies were enforced to restrict unauthorized data exposure, while response validation mechanisms filtered misleading or irrelevant outputs before reaching end users.
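Anomaly detection can take many forms; the source does not say which was used. A simple illustrative version flags users whose request volume is far above their peers by z-score. The threshold of 3 and the input shape are assumptions. With very few users, a single outlier inflates the standard deviation enough to hide itself, so a robust statistic (e.g. median absolute deviation) may suit small tenants better.

```python
from statistics import mean, pstdev
from typing import Dict, List


def flag_anomalies(counts: Dict[str, int], z_threshold: float = 3.0) -> List[str]:
    """Users whose request count is more than z_threshold std devs above the mean."""
    values = list(counts.values())
    if len(values) < 2:
        return []
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # everyone behaves identically; nothing stands out
    return sorted(user for user, c in counts.items() if (c - mu) / sigma > z_threshold)
```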

Final Testing & Deployment

A thorough end-to-end testing phase ensured system stability, accuracy, and scalability. We optimized query handling to reduce latency, deployed custom client configurations, and stress-tested the chatbot under high query loads. Comprehensive documentation and training materials were provided to facilitate client onboarding.

Post-Launch Enhancements

Following deployment, we introduced optional data anonymization for privacy compliance, configurable response formatting, and real-time monitoring for proactive issue resolution. Continuous client feedback and iterative improvements helped refine system performance, expand functionality, and enhance user experience over time.
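The sketch below shows one way optional anonymization could be applied to records and free text. Identifying fields are replaced with keyed-hash tokens (stable across records, not reversible without the key), and email addresses in free text are masked. Strictly speaking, keyed hashing is pseudonymization rather than full anonymization, since whoever holds the key can re-link tokens. The field list, token prefix, and regex are hypothetical.

```python
import hashlib
import hmac
import re
from typing import Iterable

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash: the same input always maps to the same token for a given key."""
    return "anon_" + hmac.new(key, value.encode(), hashlib.sha256).hexdigest()[:10]


def anonymize_record(record: dict, key: bytes,
                     fields: Iterable[str] = ("name", "email")) -> dict:
    """Replace the listed identifying fields; leave everything else untouched."""
    fields = set(fields)
    return {k: pseudonymize(str(v), key) if k in fields else v for k, v in record.items()}


def mask_emails(text: str) -> str:
    return EMAIL_RE.sub("[email]", text)
```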

Data Integration & Accuracy

Unified HR, CRM, financial, and operational metrics into a centralized knowledge base, reducing manual reconciliation by 58% and ensuring real-time, consistent reporting. Security filters and validation mechanisms cut AI hallucination rates by 68%, making responses more accurate, industry-specific, and compliant with business policies.

Performance Optimization & Cost Efficiency

The hybrid query routing system reduced LLM usage by 37-46%, improving latency by 32-38% for simple lookups while reserving AI for complex analytical tasks. Intelligent resource allocation lowered AI processing costs by 39%, ensuring scalability without excessive computational expenses.

User Experience & Accessibility

The AI-powered chatbot enabled natural language interactions, reducing dashboard navigation time by 47% and increasing data retrieval efficiency for executives and analysts.