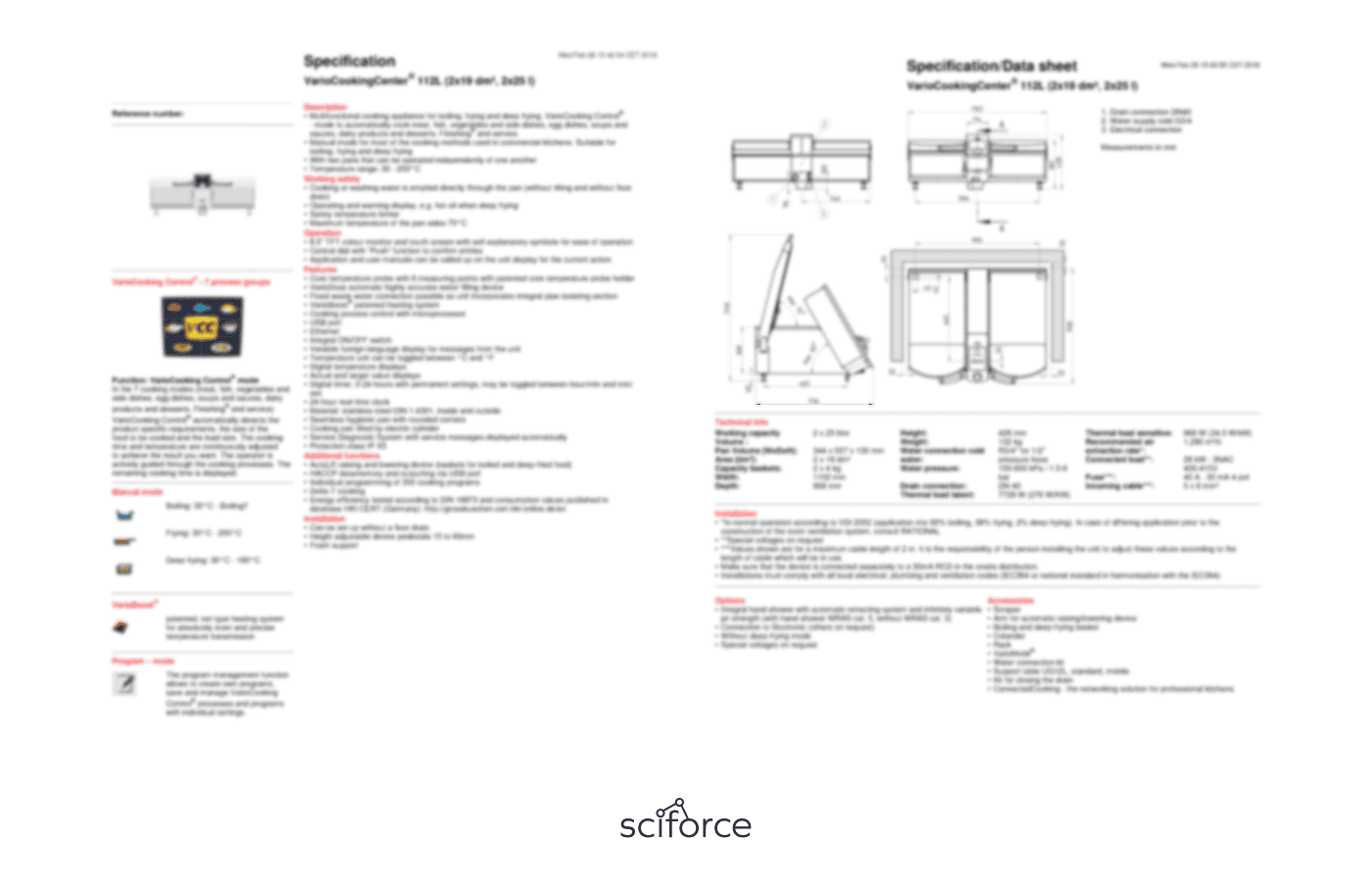

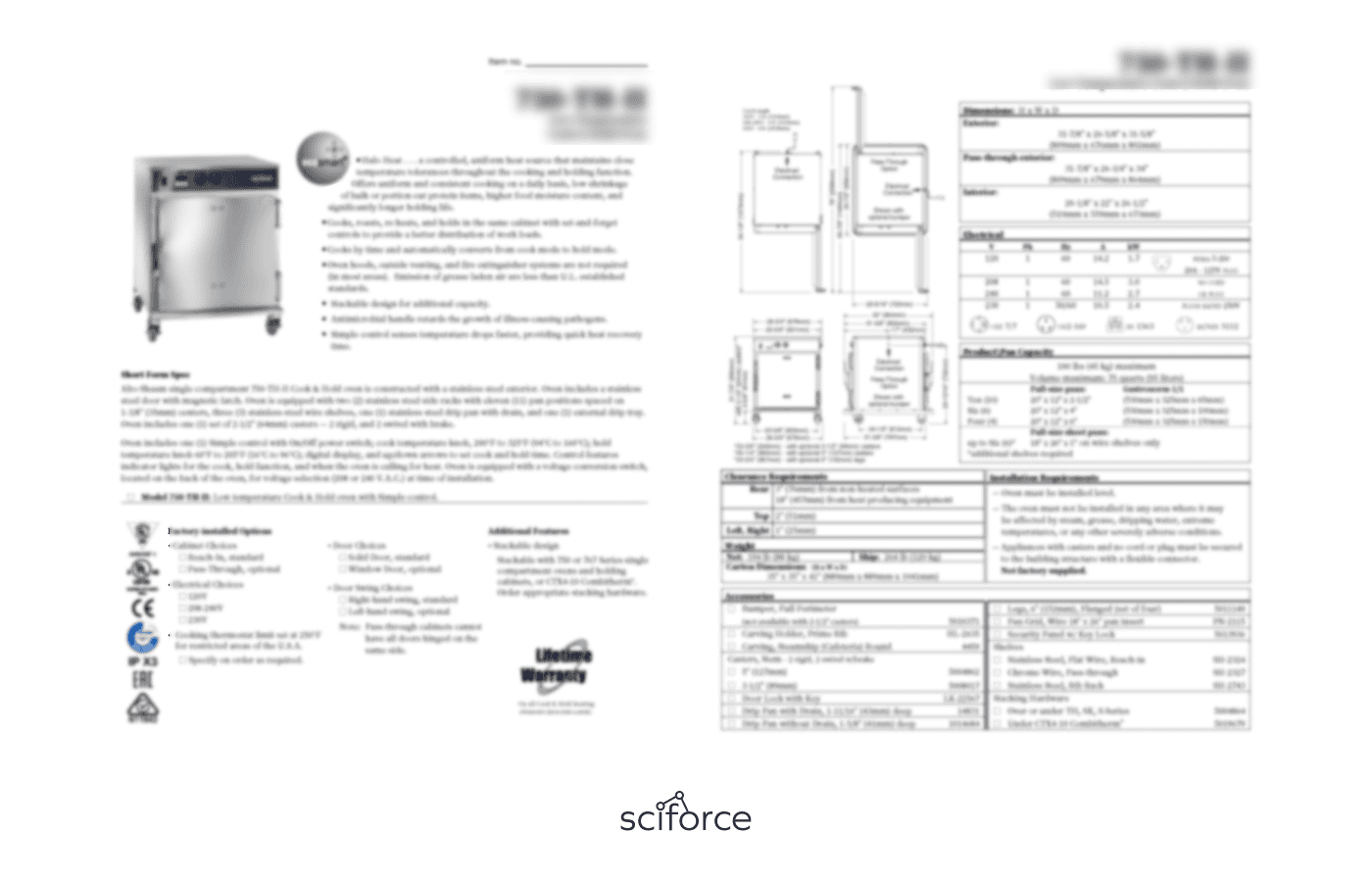

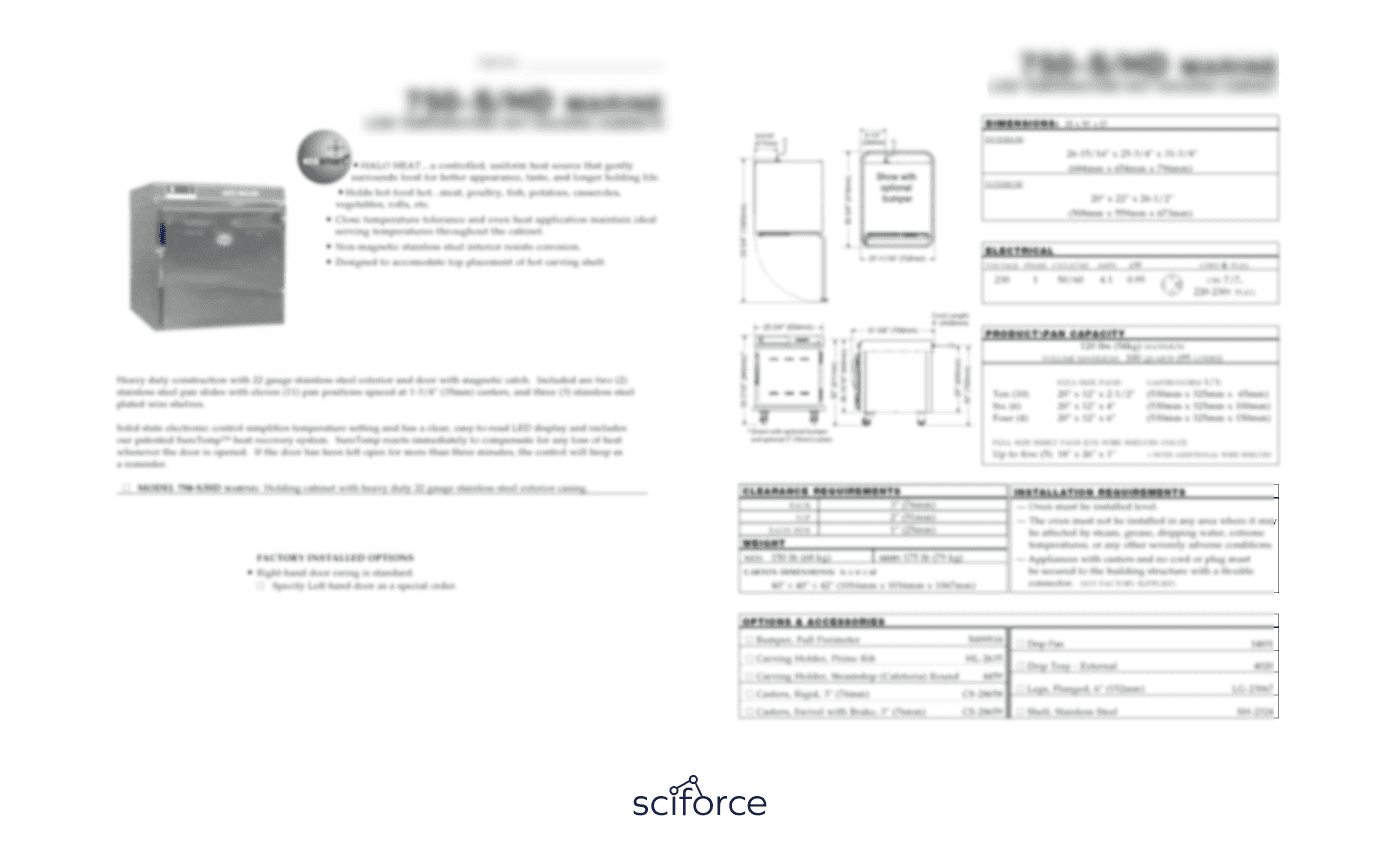

Our task was to automatically extract data fields from spec sheets that often listed multiple model numbers sharing common details, along with a table describing how the models differ. Complicating matters further, the text of these sheets had been produced by an OCR service and machine learning algorithms, so the data was heterogeneous and the tables were not always cleanly structured.

To address this challenge, we developed two distinct approaches:

Plan A

For the scenario where the client provides both the manually filled text and its categories, we match the manually filled text to the corresponding locations in the spec sheet. Our solution is a script that takes a PDF document and the manually filled data as input, and outputs a bounding box and page number for each manually filled entry. The text-processing pipeline is as follows:

Step 1. Extract text with bounding boxes using custom OCR for scanned docs and a PDF reader for text-based docs.
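The routing in Step 1 can be sketched as below, under stated assumptions: the `Word` record, the `Extractor` callables, and the `min_chars` threshold for deciding that a page is scanned are hypothetical names and choices for illustration, not the actual implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass(frozen=True)
class Word:
    """Hypothetical record for one extracted word: text, page, line index, and bbox (x0, y0, x1, y1)."""
    text: str
    page: int
    line: int
    bbox: Tuple[float, float, float, float]

# Hypothetical extractor interface: page index -> words found on that page.
Extractor = Callable[[int], List[Word]]

def extract_page(page: int, pdf_reader: Extractor, ocr: Extractor,
                 min_chars: int = 20) -> List[Word]:
    """Prefer the PDF text layer; if it holds fewer than `min_chars` characters,
    assume the page is a scanned image and fall back to OCR."""
    words = pdf_reader(page)
    if sum(len(w.text) for w in words) >= min_chars:
        return words
    return ocr(page)
```

With PyMuPDF, for example, `page.get_text("words")` returns tuples `(x0, y0, x1, y1, word, block_no, line_no, word_no)` that could be mapped onto `Word` (combining `block_no` and `line_no` into a line index); the OCR side depends on whichever engine is used.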

Step 2. For each piece of manual data (spec parameter names, values, and model names), select candidate matches from the OCR output.
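One way to generate candidates is sketched below, as an assumption rather than the authors' method: slide windows of consecutive words along each line, with window lengths close to the number of tokens in the manual entry, and keep only spans that share at least one alphanumeric token with it. `Word`, `Candidate`, `select_candidates`, and the `slack` parameter are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # x0, y0, x1, y1

@dataclass(frozen=True)
class Word:
    text: str
    page: int
    line: int  # hypothetical line index assigned during extraction
    bbox: Box

@dataclass(frozen=True)
class Candidate:
    text: str
    page: int
    bbox: Box  # union of the span's word boxes

def _tokens(s: str) -> set:
    """Lowercased alphanumeric tokens, used as a cheap overlap pre-filter."""
    return set(re.findall(r"[a-z0-9]+", s.lower()))

def select_candidates(manual: str, words: List[Word], slack: int = 1) -> List[Candidate]:
    """Return same-line spans of n +/- slack consecutive words (n = word count of `manual`)
    that share at least one token with `manual`."""
    target = _tokens(manual)
    n = max(1, len(manual.split()))
    lines: Dict[Tuple[int, int], List[Word]] = {}
    for w in words:  # group by (page, line), keeping reading order
        lines.setdefault((w.page, w.line), []).append(w)
    out = []
    for (page, _), line_words in lines.items():
        for size in range(max(1, n - slack), n + slack + 1):
            for i in range(len(line_words) - size + 1):
                span = line_words[i:i + size]
                text = " ".join(w.text for w in span)
                if _tokens(text) & target:
                    bbox = (min(w.bbox[0] for w in span), min(w.bbox[1] for w in span),
                            max(w.bbox[2] for w in span), max(w.bbox[3] for w in span))
                    out.append(Candidate(text, page, bbox))
    return out
```

The token pre-filter is deliberately loose; the ranking step then decides which candidate actually matches.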

Step 3. Rank the candidates using Levenshtein distance and other methods.
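A minimal sketch of the ranking step using only Levenshtein distance, normalized to a similarity in [0, 1]. The "other methods" are not reproduced here, and the normalization (lowercasing, collapsing whitespace) and the function names are assumptions.

```python
from typing import List, Tuple

def levenshtein(a: str, b: str) -> int:
    """Edit distance via dynamic programming; insertions, deletions, and substitutions cost 1."""
    if len(a) < len(b):
        a, b = b, a  # keep the row as short as possible
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]

def similarity(a: str, b: str) -> float:
    """1.0 for identical strings (after lowercasing and whitespace normalization), 0.0 for nothing in common."""
    a, b = " ".join(a.lower().split()), " ".join(b.lower().split())
    if not a and not b:
        return 1.0
    return 1.0 - levenshtein(a, b) / max(len(a), len(b))

def rank_candidates(manual: str, candidates: List[str]) -> List[Tuple[str, float]]:
    """Sort candidate strings by descending similarity to the manually entered text."""
    scored = [(c, similarity(manual, c)) for c in candidates]
    return sorted(scored, key=lambda t: t[1], reverse=True)
```

For example, an OCR misread such as `22O V` (letter O instead of zero) still scores 0.8 against `220 V`, so typical OCR errors lower a candidate's rank without excluding it.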

Plan B

For the scenario where the client provides only the expected spec categories for each document, we automatically fill in spec values and units of measurement and predict their locations. Our solution is a script that takes a PDF document and the specified spec categories as input, and outputs bounding boxes, page numbers, spec values, and units of measurement for each spec category. If the script is not confident enough, it outputs several candidates for human review. The pipeline for this scenario is as follows:

Step 1. Extract text with bounding boxes, using the custom OCR for scanned docs and a PDF reader for text-based docs.

Step 2. Find the approximate locations of spec values by extracting the locations of numbers, units of measurement, category names, and their synonyms, and then clustering them. The most meaningful clusters can be found in one of two ways:

- Option a: Build a graph where nodes are words and edges are weighted by the distances between their bounding boxes, then cluster it with Chinese Whispers or another graph clustering algorithm.

- Option b: For each word, count the words that lie within a predefined range (in pixels) of it. The words with the highest counts become cluster centers.
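Both options can be sketched as follows, assuming each word is represented by its bounding box in pixel coordinates. The number pattern, the `UNITS` vocabulary, the `1/(1 + d)` edge weighting (Chinese Whispers needs similarities rather than distances, so nearer words get heavier edges), and all function names are hypothetical choices for illustration.

```python
import math
import random
import re
from collections import defaultdict
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # x0, y0, x1, y1

NUMBER = re.compile(r"^[-+]?\d+(?:[.,]\d+)?$")
UNITS = {"v", "a", "w", "hz", "mm", "kg", "°c"}  # hypothetical unit vocabulary

def is_relevant(word: str, category_terms: set) -> bool:
    """Keep numbers, units, and category names/synonyms (category_terms must be lowercase)."""
    w = word.lower().strip(":;,")
    return bool(NUMBER.match(w)) or w in UNITS or w in category_terms

def center(b: Box) -> Tuple[float, float]:
    return ((b[0] + b[2]) / 2, (b[1] + b[3]) / 2)

def dist(a: Box, b: Box) -> float:
    """Euclidean distance between bounding-box centers, in pixels."""
    (ax, ay), (bx, by) = center(a), center(b)
    return math.hypot(ax - bx, ay - by)

def chinese_whispers(boxes: List[Box], max_dist: float, iters: int = 20, seed: int = 0) -> List[int]:
    """Option a: connect words closer than max_dist, then let each node repeatedly adopt
    the label with the highest total edge weight among its neighbours."""
    n = len(boxes)
    edges: Dict[int, List[Tuple[int, float]]] = defaultdict(list)
    for i in range(n):
        for j in range(i + 1, n):
            d = dist(boxes[i], boxes[j])
            if d <= max_dist:
                w = 1.0 / (1.0 + d)
                edges[i].append((j, w))
                edges[j].append((i, w))
    labels = list(range(n))  # every node starts in its own class
    rng = random.Random(seed)
    order = list(range(n))
    for _ in range(iters):
        rng.shuffle(order)  # Chinese Whispers visits nodes in random order
        for i in order:
            if not edges[i]:
                continue  # isolated words keep their own label
            votes: Dict[int, float] = defaultdict(float)
            for j, w in edges[i]:
                votes[labels[j]] += w
            labels[i] = max(votes, key=votes.get)
    return labels

def density_centers(boxes: List[Box], radius: float, top_k: int = 3) -> List[int]:
    """Option b: count the neighbours within `radius` pixels of each word;
    the densest words are returned as cluster centers."""
    counts = [sum(1 for j, b in enumerate(boxes) if j != i and dist(a, b) <= radius)
              for i, a in enumerate(boxes)]
    return sorted(range(len(boxes)), key=lambda i: counts[i], reverse=True)[:top_k]
```

In this sketch, `is_relevant` would filter the OCR words before clustering, so that only numbers, units, and category terms contribute to the clusters.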

Step 3. Associate clusters with products. For this, we need to perform the following operations:

Step 4. Select clusters that potentially contain necessary specs and extract information from them:

Additional useful tricks

To increase the accuracy of clustering and information extraction, we use several tricks, such as extracting the most important words of each document and building distributions of spec values to detect anomalous values. Overall, this approach allowed us to extract data from semi-structured spec sheets in a fast, reliable, and scalable way.
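The two tricks can be illustrated with standard techniques, swapped in here as assumptions since the exact methods are not described: TF-IDF for picking a document's important words, and a median/MAD "modified z-score" for flagging spec values that fall far outside their usual distribution. Function names and thresholds are hypothetical.

```python
import math
import statistics
from collections import Counter
from typing import List

def tfidf_keywords(docs: List[List[str]], top_k: int = 5) -> List[List[str]]:
    """Rank each document's words by TF-IDF: words frequent in one document
    but rare across the corpus score highest (ties broken alphabetically)."""
    n = len(docs)
    df = Counter(w for doc in docs for w in set(doc))  # document frequency
    result = []
    for doc in docs:
        tf = Counter(doc)
        scores = {w: (c / len(doc)) * math.log(n / df[w]) for w, c in tf.items()}
        result.append(sorted(scores, key=lambda w: (-scores[w], w))[:top_k])
    return result

def flag_anomalies(values: List[float], threshold: float = 3.5) -> List[bool]:
    """Flag values whose modified z-score, 0.6745 * (v - median) / MAD, exceeds `threshold`.
    Expects a non-empty list; with MAD == 0, anything differing from the median is flagged."""
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return [v != med for v in values]
    return [abs(0.6745 * (v - med) / mad) > threshold for v in values]
```

Median and MAD are used instead of mean and standard deviation because a single misread value (for example, a dropped decimal point) would otherwise inflate the spread and hide itself.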

We've developed a system that can extract fields from spec sheets using an algorithmic pipeline. Although a pure ML solution based on attention models is possible, it may be less reliable and more time-consuming.