Ever wonder why so many machine learning (ML) models never see the light of day? Despite the huge potential of ML, only 32% of data scientists say their models usually get deployed. Even more shocking, 43% report that 80% or more of their models never make it into production. This means many businesses miss out on the full value of their AI projects. Imagine a retail company spending months developing a sophisticated customer recommendation system, only for it never to be implemented, losing out on potential sales and customer satisfaction.

Maximizing return on investment (ROI) in AI projects is crucial, and that's where MLOps (Machine Learning Operations) comes in. MLOps is the key to solving deployment issues and making sure ML models are implemented and managed effectively to deliver the best ROI.

This article will explore how MLOps can bridge the gap between ML model development and deployment, turning AI investments into real-world successes. Discover the benefits of MLOps, its core components, and best practices to help your organization maximize ROI. And if you want to learn more about setting up MLOps for the first time, check out our earlier article.

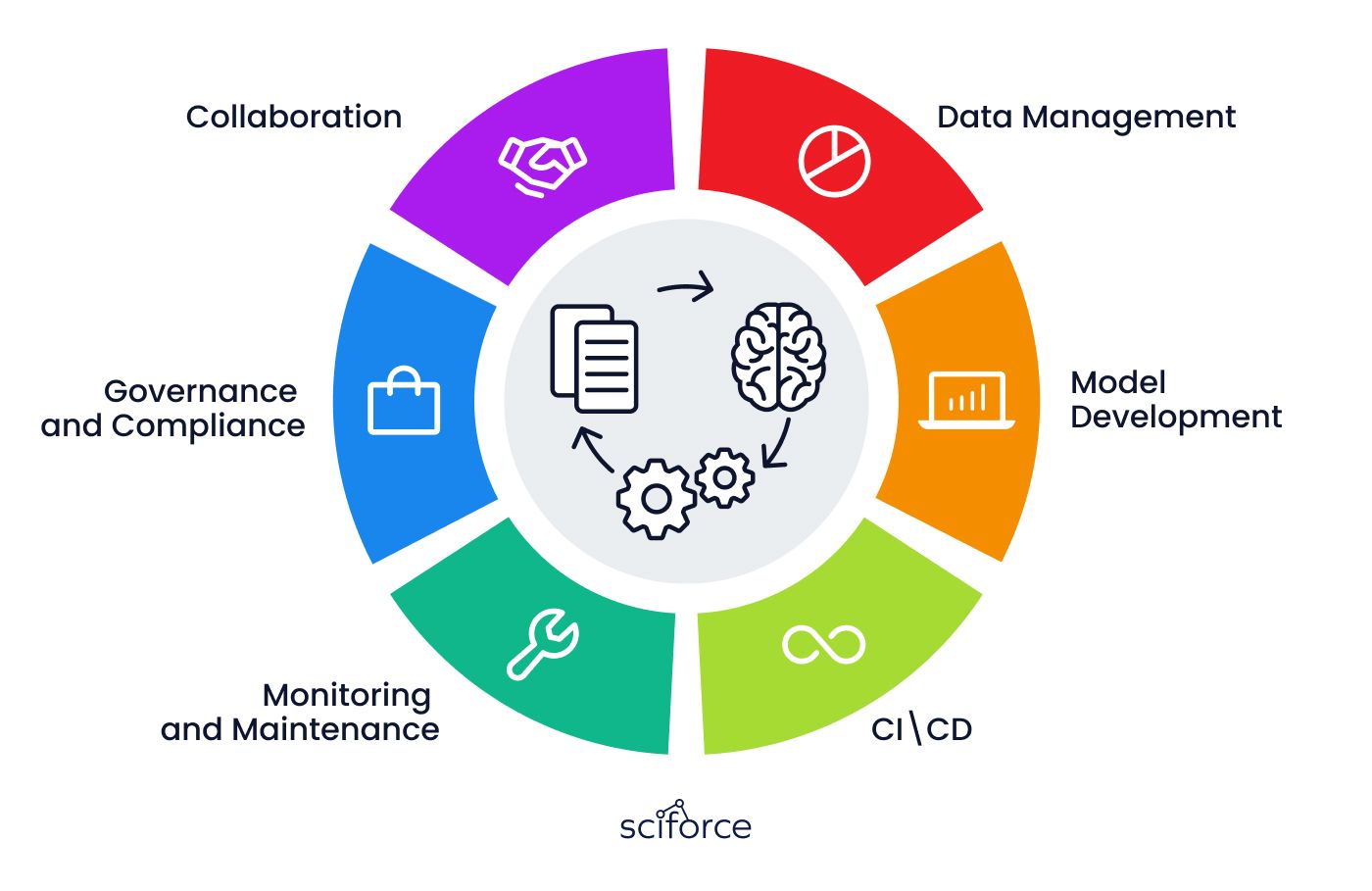

MLOps, or Machine Learning Operations, is a set of practices that combines machine learning, DevOps, and data engineering to deploy and maintain ML models in production reliably and efficiently. It aims to streamline the ML lifecycle, ensuring models are developed, deployed, monitored, and managed effectively. The key components of MLOps include:

- Data Management:

Ensuring high-quality data collection, cleaning, preprocessing, versioning, and lineage tracking.

- Model Development:

Facilitating training, validation, and experimentation with robust experiment tracking and reproducibility.

- Continuous Integration and Deployment (CI/CD):

Implementing automated testing and deployment pipelines for ML models to ensure seamless integration and continuous delivery.

- Monitoring and Maintenance:

Continuously tracking model performance, identifying when retraining is needed, and updating models to maintain accuracy and relevance.

- Governance and Compliance:

Establishing policies and procedures to manage the ML model lifecycle, ensuring adherence to organizational standards, industry regulations, and ethical guidelines.

- Collaboration:

Facilitating effective communication and teamwork among data scientists, engineers, and business stakeholders to enhance productivity and align with business objectives.
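To make the Monitoring and Maintenance component from the list above more concrete, here is a minimal sketch of a retraining trigger. It assumes that ground-truth labels eventually arrive for each prediction. The class name, the window size, and the tolerance are illustrative assumptions, not part of any specific MLOps tool.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks recent prediction outcomes and flags when retraining may be needed."""

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05, window: int = 100):
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance            # hypothetical allowed drop below the baseline
        self.outcomes = deque(maxlen=window)  # 1 = correct prediction, 0 = incorrect

    def record(self, prediction, actual) -> None:
        self.outcomes.append(1 if prediction == actual else 0)

    def rolling_accuracy(self) -> float:
        if not self.outcomes:
            return self.baseline_accuracy
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # Decide only once the window is full, to avoid reacting to a handful of samples.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline_accuracy - self.tolerance
```

In practice, teams often watch input-data drift as well, since labels can arrive late. Accuracy is used here only because it is the simplest signal to illustrate.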

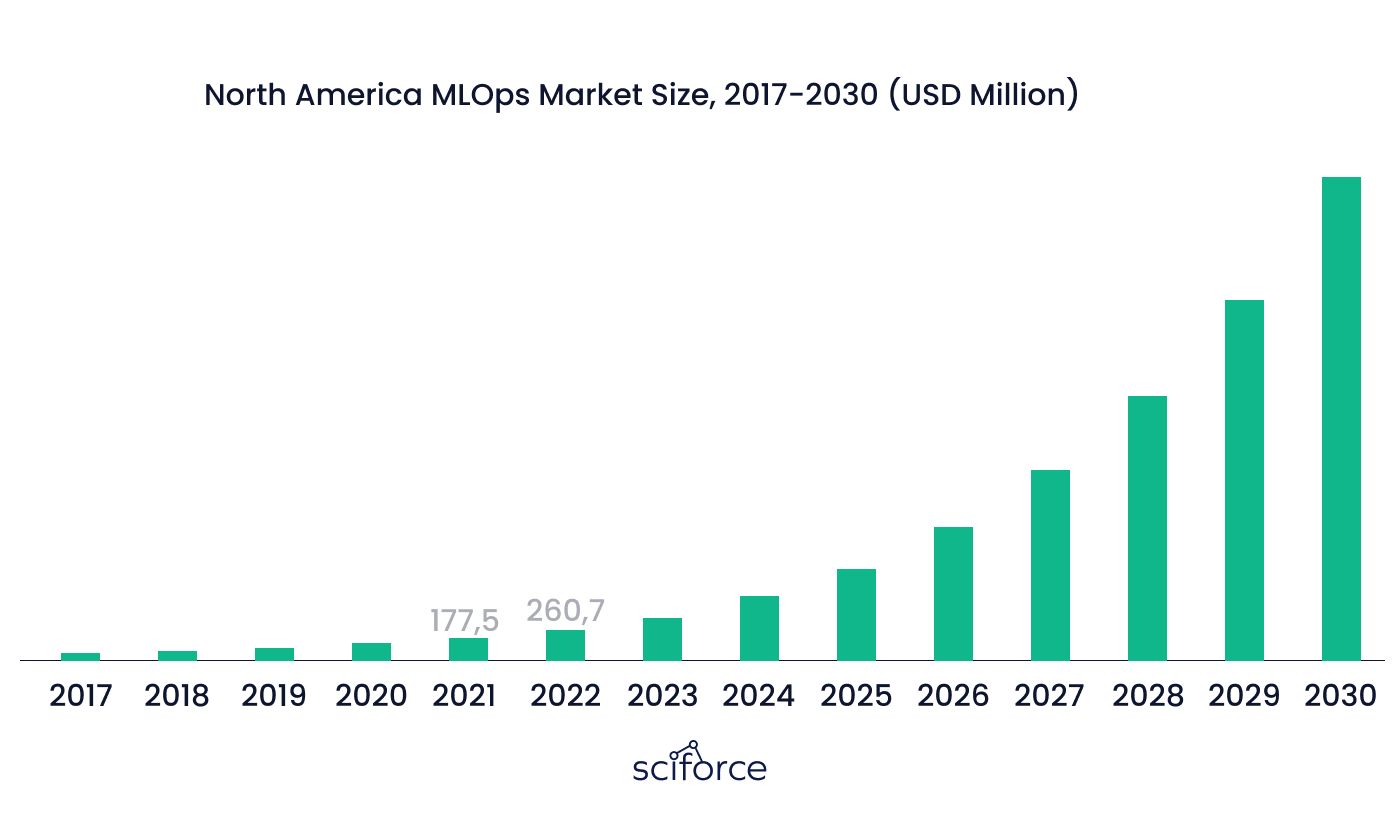

The MLOps market is booming. Valued at $720 million in 2022, it's expected to skyrocket to $13.32 billion by 2030, growing at an annual rate of 43.5%. This rapid growth shows that more organizations are jumping on the MLOps bandwagon to make the most of their ML models.

Return on Investment (ROI) in AI and ML projects measures the financial benefits derived from these projects relative to their costs. High ROI in AI projects indicates that the investments in developing and deploying ML models generate substantial value, such as increased revenue, cost savings, improved efficiency, and enhanced customer satisfaction.
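As a quick illustration of the definition above, ROI is commonly computed as net benefit divided by cost. The function below is a minimal sketch, and the figures in the comment are made up for the example.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical project: $500,000 spent, $650,000 in measurable benefits.
example_roi = roi(total_benefit=650_000, total_cost=500_000)  # about 0.3, i.e. 30%
```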

However, achieving high ROI in AI projects can be challenging due to several factors:

- Deployment Failures:

Many ML models fail to make it into production, limiting their ability to deliver value. According to McKinsey, only 15% of ML projects succeed, with just 36% going beyond the pilot stage.

- Operational Inefficiencies:

Without proper practices, ML projects can become time-consuming and resource-intensive.

- Scalability Issues:

Scaling ML models across different business units and functions can be difficult without a systematic approach.

- Maintenance and Monitoring:

Ensuring models remain accurate and relevant over time requires ongoing monitoring and maintenance.

MLOps plays a crucial role in addressing these challenges and improving the ROI of AI and ML projects. Here's how:

- Increased Deployment Success Rates:

By automating the deployment process and ensuring models are seamlessly integrated into production environments, MLOps significantly increases the likelihood that ML models will be successfully deployed and maintained. Companies using MLOps and data analytics can see a 30% increase in ROI.

- Efficiency and Cost Savings:

MLOps streamlines workflows, reduces manual interventions, and automates repetitive tasks, leading to lower operational costs and more efficient use of resources.

- Improved Model Performance:

Continuous monitoring and maintenance of models ensure they perform reliably and accurately, delivering better results and more value over time.

- Faster Time-to-Market:

MLOps accelerates the development and deployment cycle, enabling quicker innovation and allowing businesses to capitalize on opportunities faster.

- Scalability:

MLOps supports the scalable deployment of ML models across various business units and functions, ensuring consistent performance and value generation.

McDonald's, the backbone of America's fast-food industry, generates approximately $7.84 billion across more than 13,800 restaurants in the United States. With a significant presence in high-density urban areas such as New York City, McDonald's employs adaptive strategies to thrive in dynamic environments. As McDonald's expanded its third-party food delivery services across 40 markets on five continents, they encountered several challenges:

- Disparate Data Sources:

Integrating data from numerous third-party food delivery partners into a unified and cohesive system.

- Performance Tracking:

Ensuring accurate measurement and tracking of food delivery operations to maintain sufficient inventory, adequate staffing, and timely deliveries.

- Operational Insights:

Transforming vast amounts of raw data into actionable insights for continuously improving delivery operations and maintaining control over revenue and customer brand loyalty.

McDonald's tackled their data challenges by developing a robust AI-driven solution to streamline their food delivery operations.

Data Integration and Processing

McDonald's developed a data model and ETL process to aggregate and normalize data from various third-party food delivery partners into a centralized data warehouse. By consolidating disparate data, such as delivery times, customer feedback, and transaction details, McDonald's gained comprehensive, real-time operational intelligence.
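The normalization step described above can be sketched as follows. The two partner formats, the field names, and the unified schema are all hypothetical and chosen only to show the idea of mapping different sources onto one schema. This is not McDonald's actual data model.

```python
def normalize_partner_a(record: dict) -> dict:
    # Hypothetical partner A reports minutes and a dollar amount as a string.
    return {
        "order_id": record["order"],
        "partner": "partner_a",
        "delivery_minutes": float(record["delivery_minutes"]),
        "total_usd": float(record["total_usd"]),
    }


def normalize_partner_b(record: dict) -> dict:
    # Hypothetical partner B reports seconds and integer cents.
    return {
        "order_id": record["id"],
        "partner": "partner_b",
        "delivery_minutes": record["delivery_seconds"] / 60,
        "total_usd": record["total_cents"] / 100,
    }


NORMALIZERS = {"partner_a": normalize_partner_a, "partner_b": normalize_partner_b}


def normalize(partner: str, record: dict) -> dict:
    """Map a partner-specific record onto the unified schema."""
    return NORMALIZERS[partner](record)
```

Keeping one small function per partner means that adding a new delivery partner only requires registering one more entry in `NORMALIZERS`.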

Model Development and Deployment

McDonald's developed advanced machine learning models to analyze performance metrics such as sales, delivery times, customer feedback, and fees. They implemented CI/CD (Continuous Integration and Continuous Deployment) pipelines using tools like Jenkins and Docker to automate testing, integration, and deployment of these models.
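One automated check that a CI/CD pipeline for models often includes is a promotion gate, which compares a candidate model with the one already in production. The sketch below assumes each model is summarized by an `accuracy` and a `latency_ms` metric. Those names and the default thresholds are illustrative assumptions, not details from McDonald's pipeline.

```python
def should_promote(candidate: dict, production: dict,
                   min_accuracy_gain: float = 0.0,
                   max_latency_ms: float = 200.0) -> bool:
    """Return True if the candidate model may replace the production model."""
    accuracy_gain = candidate["accuracy"] - production["accuracy"]
    if accuracy_gain < min_accuracy_gain:
        return False  # candidate does not reach the required accuracy gain
    if candidate["latency_ms"] > max_latency_ms:
        return False  # candidate is too slow to serve
    return True
```

A pipeline step could call this function after the test suite passes and stop the deployment when it returns `False`.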

Real-Time Monitoring and Anomaly Detection

McDonald's set up a real-time monitoring system to track metrics like route time, restaurant wait time, and fulfillment time. Advanced algorithms detected anomalies, enabling quick responses to issues such as delivery delays and customer satisfaction drops, ensuring consistent high service standards.
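The article does not say which algorithms were used, so the following is only a simple stand-in: a rolling z-score check that flags a metric value (for example, fulfillment time in minutes) when it deviates strongly from the recent past. The window size and threshold are assumptions.

```python
from statistics import mean, stdev


def find_anomalies(values, window=20, threshold=3.0):
    """Return indices whose value is more than `threshold` standard
    deviations away from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            continue  # no variation in the window, so a z-score is undefined
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

A real-time system would apply the same check to each new measurement as it arrives instead of scanning a finished list.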

Implementing MLOps brought transformative improvements to McDonald's operations, resulting in higher sales, enhanced model accuracy, and significant cost savings.

Increased Sales and Customer Satisfaction

By using MLOps to analyze customer demographics and preferences, McDonald's has increased total food delivery orders, which now account for more than 10% of sales in locations that offer delivery. The average dollar value per transaction is also significantly higher for delivery orders than for non-delivery orders.

Improved Model Performance

By targeting neighborhoods with household incomes between $50,000 and $77,000, and using MLOps to continuously analyze and adjust their strategies, McDonald's ensured their models performed reliably and accurately.

Cost Savings

By optimizing inventory management and staffing based on real-time data insights, McDonald's achieved substantial cost savings. Effective project tiering and resource management further contributed to cost reduction.

Revolut, a UK fintech company, developed Sherlock, a machine learning-based fraud prevention system, to counter the growing threat of financial fraud. Sherlock continuously and autonomously monitors card users' transactions. When it finds a suspicious transaction, it sends the user a push notification asking for approval. In building it, Revolut had to address the following challenges:

- Evolving Fraud Techniques:

Fraudsters are continually developing new methods to bypass traditional fraud detection systems.

- High Availability and Throughput:

As a mission-critical application, Sherlock needs consistently high availability and high throughput to serve a rapidly growing customer base.

- Financial Cost of Fraud:

On average, financial fraud costs institutions between 7 and 8 cents out of every $100 processed.

The implementation of Sherlock involved several key components:

Data Collection and Preprocessing

Revolut continuously gathers transactional data, including payment history, transaction amounts, merchant details, and geolocation data. The data is cleaned and structured using ETL (Extract, Transform, Load) pipelines to prepare it for model training and analysis.

Model Development and Deployment

Revolut uses ML algorithms, such as ensemble methods and deep learning models, to build Sherlock's fraud detection system. CI/CD pipelines automate testing, integration, and deployment of new model versions, keeping Sherlock up-to-date.

Real-Time Monitoring

Revolut monitors key performance metrics like detection accuracy, false positive rates, and system latency in real-time. This enables the team to quickly identify and address anomalies or performance issues.
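Two of the metrics mentioned above can be computed directly from labeled outcomes. The sketch below treats 1 as fraud and 0 as legitimate, and reports the detection rate (recall) and the false positive rate. It is a generic illustration, not Revolut's monitoring code.

```python
def fraud_metrics(y_true, y_pred):
    """Compute detection rate and false positive rate from 0/1 labels."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == 1 and predicted == 1:
            tp += 1
        elif actual == 1 and predicted == 0:
            fn += 1
        elif actual == 0 and predicted == 1:
            fp += 1
        else:
            tn += 1
    return {
        # Share of real fraud cases that the model caught.
        "detection_rate": tp / (tp + fn) if (tp + fn) else 0.0,
        # Share of legitimate transactions that were wrongly flagged.
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
    }
```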

Anomaly Detection

Sherlock uses advanced algorithms to detect unusual patterns indicating fraud. Automated responses are triggered, flagging suspicious transactions for further investigation or temporarily blocking them until verified.
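The automated responses described above can be pictured as a routing rule on top of a model's fraud score. The thresholds and action names below are hypothetical. The article does not describe how Sherlock actually makes this decision.

```python
def route_transaction(fraud_score: float,
                      review_threshold: float = 0.5,
                      block_threshold: float = 0.9) -> str:
    """Map a fraud score in [0, 1] to an automated action."""
    if fraud_score >= block_threshold:
        return "block_until_verified"  # hold the payment until the user confirms it
    if fraud_score >= review_threshold:
        return "flag_for_review"       # let it proceed to further investigation
    return "approve"
```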

Implementing Sherlock brought significant improvements to Revolut's fraud detection and prevention efforts, resulting in high detection accuracy, substantial cost savings, enhanced operational efficiency, and superior performance.

High Detection Accuracy

Sherlock's high-speed caching enabled machine learning algorithms to continuously learn and adapt, resulting in 96% of fraudulent transactions being detected in real-time.

Cost Savings

Within the first year, Sherlock saved Revolut over $3 million by preventing fraud. The system ensures that just 1 cent out of $100 processed is lost to fraud, significantly lower than the industry average.
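To put the per-$100 figures in perspective, the short calculation below converts a loss rate in cents per $100 into a dollar amount. The $1 billion processed volume is a made-up number used only for the arithmetic.

```python
def fraud_loss(volume_usd: float, cents_lost_per_100: float) -> float:
    """Dollars lost to fraud for a given processed volume and loss rate."""
    dollars_lost_per_100 = cents_lost_per_100 / 100
    return volume_usd * dollars_lost_per_100 / 100


volume = 1_000_000_000                 # hypothetical processed volume in USD
industry_loss = fraud_loss(volume, 7)  # 7 cents per $100: 700,000 USD
sherlock_loss = fraud_loss(volume, 1)  # 1 cent per $100: 100,000 USD
```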

Operational Efficiency

By “delegating” the process of authenticating suspicious transactions to users in real time, Revolut reduces the need for extensive support staff. Even so, users are rarely interrupted: they receive push notifications from Sherlock only 0.1% of the time.

Performance Improvement

Sherlock achieved a 75% improvement over industry standards in transaction processing speed while maintaining high availability and scalability for the growing user base.

Walmart, a global retail giant, serves approximately 240 million customers each week through its 10,500 stores and e-commerce websites across 19 countries. To manage the complex logistics of its supply chain, which includes over 100,000 global suppliers, 150 distribution centers, ocean freighters, air cargo, and one of the largest private fleets of trucks in the world, Walmart has integrated artificial intelligence (AI) and machine learning (ML). Despite the benefits of AI and ML, scaling these solutions posed several challenges:

- Vendor Lock-ins:

Dependence on specific cloud providers, leading to limited flexibility.

- High License Costs:

Exorbitant fees associated with proprietary tools.

- Limited Availability and Reliability:

Issues with ensuring consistent performance across different platforms.

- Customization:

Difficulties in tailoring solutions to specific business needs.

Walmart harnesses the power of AI with their tool, Element, to optimize various aspects of their operations. Element aids data scientists in evaluating sales data, enhancing search functionality, analyzing market intelligence, and improving last-mile delivery.

Channel Performance

Walmart provides an AI-powered tool for channel partners to evaluate sales data, promotions, and shelf assortments. Element helps data scientists perform feature engineering and ML modeling on individual items, reducing training time and costs per supplier.

Search Optimization

Element enhances the search functionality on Walmart.com by enabling data scientists to train models on complex hypotheses and large feature sets. Improved hyperparameter tuning accelerates experiments, allowing rapid iteration and optimization of search results.

Market Intelligence

Element supports Walmart's Market Intelligence solution, which analyzes competitor pricing, assortment, and marketing strategies. GPU-enabled notebooks and an inference service from Element facilitate quick ML model development and deployment.

Last Mile Delivery

The intelligent driver dispatch system, built on Element, optimizes delivery by matching drivers to trips efficiently. This reduces delivery costs and lead times while maintaining high on-time delivery rates.
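Matching drivers to trips can be framed as an assignment problem: given a cost for each driver-trip pair, pick the pairing with the lowest total cost. The brute-force sketch below is only practical for a handful of drivers and is not how Walmart's system works. It assumes a square cost matrix, and the costs themselves are hypothetical.

```python
from itertools import permutations


def best_assignment(cost):
    """cost[d][t] is the cost of driver d serving trip t (square matrix).

    Returns (assignment, total) where assignment[d] is the trip given to driver d.
    """
    n = len(cost)
    best_total, best_perm = float("inf"), None
    for perm in permutations(range(n)):
        total = sum(cost[d][perm[d]] for d in range(n))
        if total < best_total:
            best_total, best_perm = total, perm
    return list(best_perm), best_total
```

At production scale the same problem is normally solved with a dedicated assignment algorithm rather than by enumerating every pairing.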

Implementing Element has significantly enhanced Walmart's operational efficiency, automation impact, and cost savings.

Operational Efficiency

By leveraging Element, Walmart reduced the time spent on evaluating vendors and tools, lowered start-up times, and accelerated the development, deployment, and operationalization of models. The time to operationalize models has been reduced from weeks to under an hour.

Automation Impact

Automation in the supply chain is estimated to improve unit cost averages by around 20%. Walmart plans to power 65% of its stores with automation technologies by 2026. The automation of 55% of fulfillment center volume has greatly increased efficiency and reduced operational costs.

Cost Savings

Walmart achieved substantial cost savings by avoiding vendor lock-ins and reducing licensing fees. Walmart's supplier-negotiation chatbot alone negotiated with 68% of the suppliers it approached, gaining 1.5% in savings and extending payment terms.

Our client aimed to develop an AI-powered tool to analyze trader behavior and predict market moves for hedgers, arbitrageurs, and speculators. Key challenges included differentiating trader types, recognizing patterns, building profiles, simulating behavior, and achieving precision.

Solution:

We created an ML-driven model using LSTM-based neural networks and XGBoost algorithms to predict trader actions (Idle, Buy, or Sell) based on transaction history. The solution included:

Data Integration:

Aggregated and normalized data from various sources into a centralized warehouse.

Model Development and Deployment:

Used LSTM and XGBoost to analyze performance metrics, with CI/CD pipelines for automated updates.

Real-Time Monitoring and Anomaly Detection:

Implemented a system to track key metrics and detect anomalies for quick response.

Impact:

Operational Efficiency:

Reduced model operationalization time from weeks to under an hour, improving efficiency by 75%.

Cost Savings:

Achieved a 20% reduction in operational costs through effective resource management and reduced computational resources.

Improved Model Performance:

Achieved 85% prediction accuracy for trader behavior, with high confidence scores for 'Buy' and 'Idle' predictions.

Increased Prediction Accuracy:

Improved prediction accuracy by 30%, leading to better trading decisions.

Enhanced Trading Strategy:

Optimized trading strategies, resulting in a 15% increase in profitable trades.

Return on Investment (ROI):

Significant ROI through improved efficiency and reduced costs, contributing to the client's bottom line with a 15% increase in profitable trades and 20% cost savings.

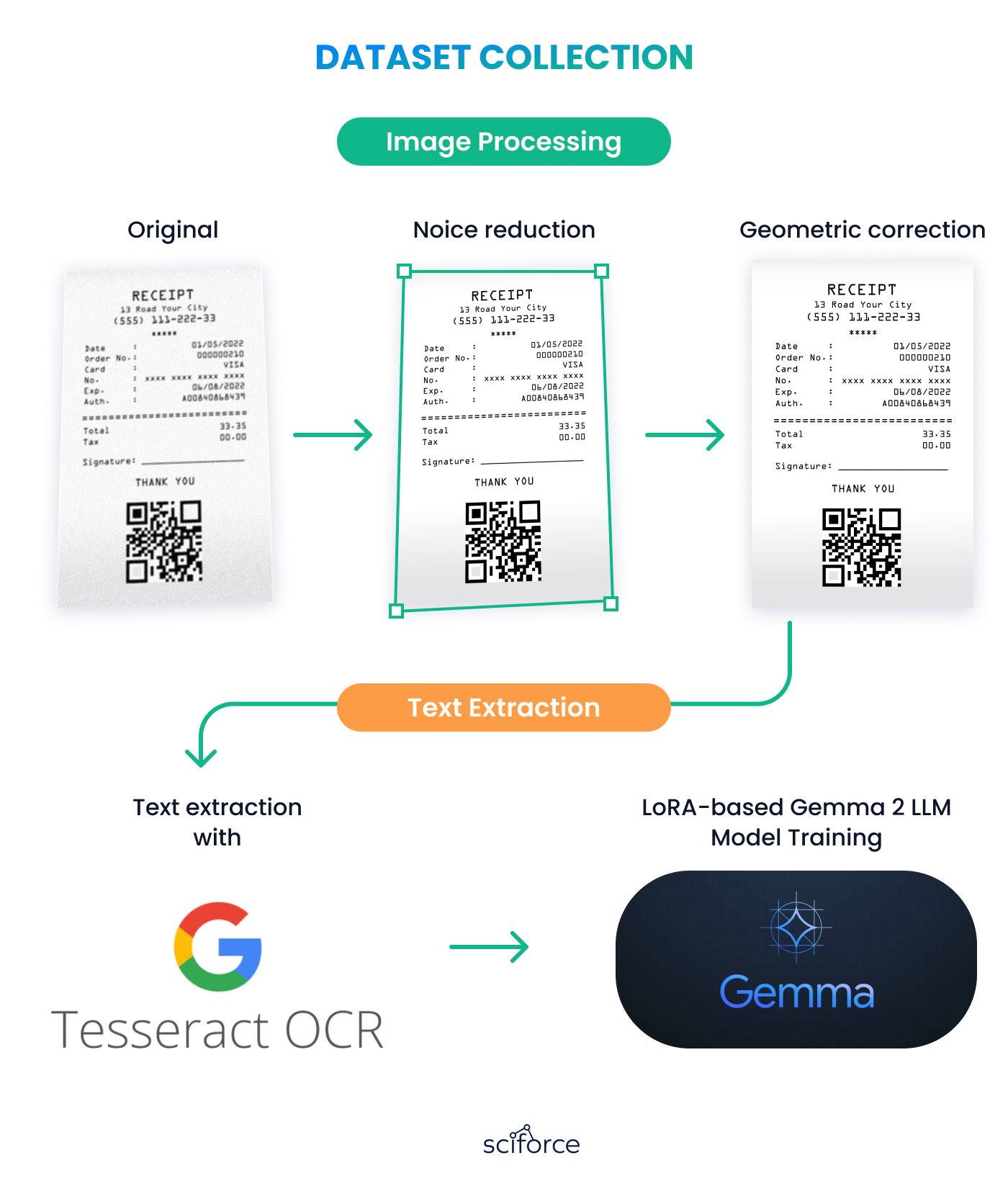

In the FinTech sector, efficiently managing diverse receipt formats for taxi services is essential to ensure data accuracy, reduce processing times, and minimize costs. Our client, specializing in digital invoicing and data management for transportation services, sought to streamline the conversion of physical taxi receipts into a standardized digital format. We had to solve the following challenges:

Variability of Data:

Taxi receipts came in various formats with different layouts, languages, and structures, including horizontal and vertical item listings in both Swedish and English.

Poor Photo Quality:

Receipts were often photographed with low resolution, poor lighting, blurriness, shadows, reflections, and at awkward angles, complicating data extraction.

Data Accuracy:

Ensuring reliable data extraction from diverse and low-quality receipt images, despite inconsistent layouts and field formats.

Model Training Resources:

Training large models like LLMs required substantial computational resources, including high-performance GPUs and extensive memory.

Integration and Scalability:

Integrating processed data into existing systems and scaling to handle millions of receipts per month.

To address these challenges, we developed an AI-powered system that leverages advanced technologies such as Optical Character Recognition (OCR), Generative Adversarial Networks (GANs), and Large Language Models (LLMs) to streamline the processing of taxi receipts. This system was meticulously designed to handle a wide range of receipt formats and varying photo quality, ensuring high data accuracy and efficient processing. Our approach included several key components:

Data Collection and Model Training:

Compiled a dataset of around 10,000 receipts in various formats, in both English and Swedish, to train models for accurate recognition and adaptation. LoRA-based training kept resource usage low while maintaining high accuracy.
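LoRA saves resources because it freezes the original weight matrix and trains two small low-rank matrices instead. For a layer of size `d_out x d_in` and rank `r`, the trainable parameters drop from `d_out * d_in` to `r * (d_out + d_in)`. The layer size and rank in the example are illustrative assumptions, not the configuration used in this project.

```python
def lora_param_counts(d_out: int, d_in: int, rank: int):
    """Return (full fine-tuning params, LoRA params) for one weight matrix."""
    full = d_out * d_in            # every weight of the layer is trainable
    lora = rank * (d_out + d_in)   # B is d_out x rank, A is rank x d_in
    return full, lora


full, lora = lora_param_counts(d_out=4096, d_in=4096, rank=8)
fraction = lora / full  # about 0.0039, i.e. under 0.4% of the layer's weights
```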

Image Enhancement:

Implemented advanced techniques such as noise reduction and upsampling with GANs to improve receipt image quality before processing.

Accurate Text Extraction:

Employed OCR technology like Tesseract and trained LLMs to enhance text extraction and classification accuracy, with post-processing steps to correct errors and maintain data integrity.
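A post-processing step of the kind mentioned above might look like the sketch below, which cleans an OCR-extracted amount before it is stored. The character substitutions and the accepted number format are simplifying assumptions. A production pipeline would need to handle currencies, thousands separators, and more error patterns.

```python
import re

# Letters that OCR engines commonly confuse with digits in numeric fields.
_DIGIT_FIXES = str.maketrans({"O": "0", "o": "0", "l": "1", "I": "1", "S": "5", "B": "8"})


def parse_amount(raw: str):
    """Clean an OCR'd amount such as 'l2O,5O' and return a float, or None if invalid."""
    cleaned = raw.strip().translate(_DIGIT_FIXES)
    cleaned = cleaned.replace(" ", "")
    cleaned = cleaned.replace(",", ".")  # Swedish receipts use a decimal comma
    # Accept only digits with an optional one- or two-digit decimal part.
    if not re.fullmatch(r"\d+(\.\d{1,2})?", cleaned):
        return None
    return float(cleaned)
```

Returning `None` rather than guessing lets the pipeline route unreadable values to manual review, which helps maintain data integrity.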

Implementing the AI-powered system effectively strengthened the client's business. Here are the key results achieved:

Accuracy Improvement:

Enhanced image quality and advanced text extraction technologies improved data accuracy by 80%, ensuring reliable financial records.

Operational Cost Reduction:

With a 50% reduction in processing costs, the client achieved significant savings, directly impacting the bottom line.

Efficiency Improvements:

The 80% increase in processing speed allowed the client to handle a higher volume of transactions without additional resources, maximizing throughput and revenue potential.

Risk Reduction:

The 80% improvement in data accuracy minimized errors and discrepancies, reducing financial risks and ensuring compliance with regulatory standards.

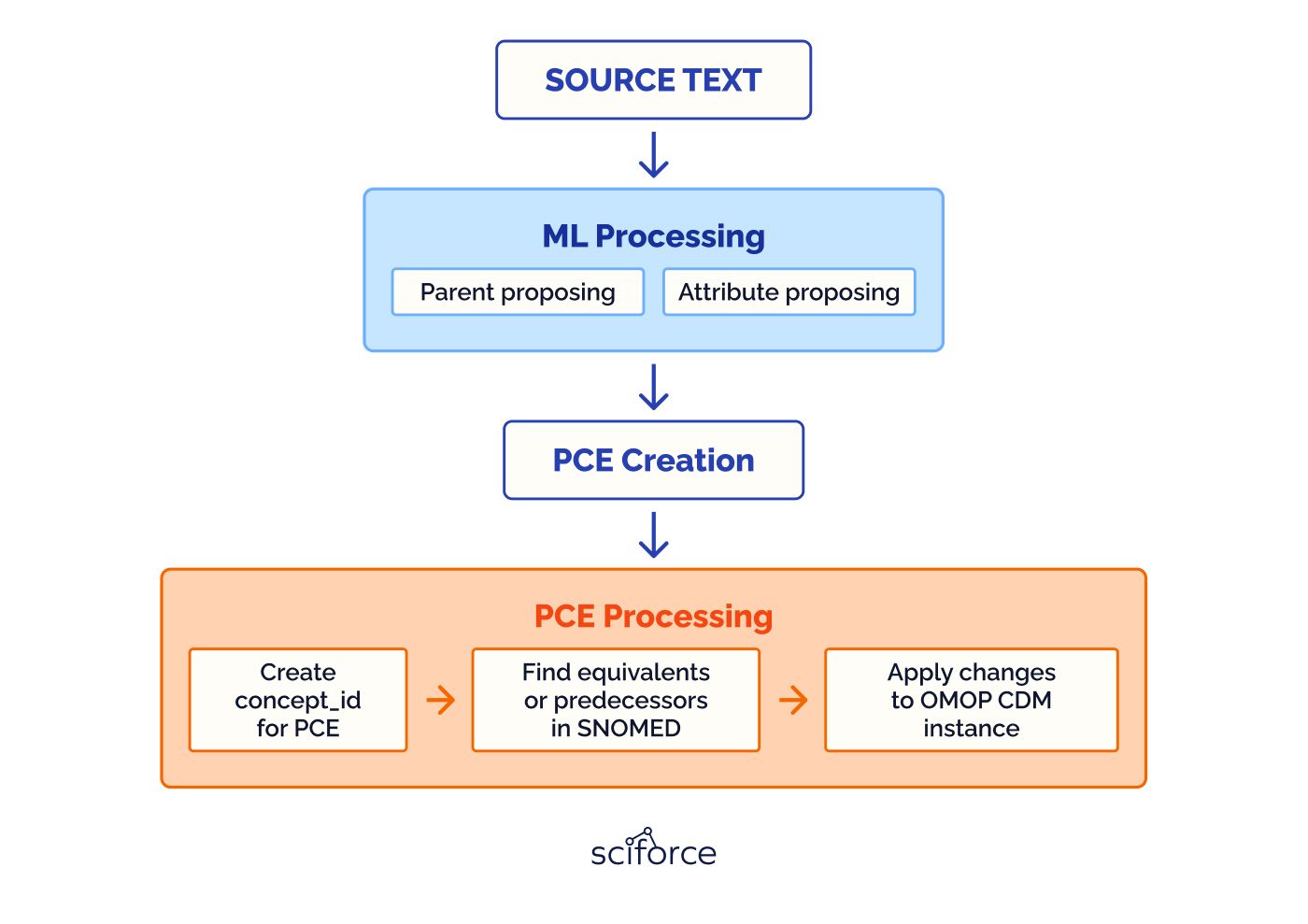

Jackalope was born out of the need to efficiently map complex medical data into standardized formats like OMOP CDM and SNOMED CT. Traditional methods were costly and time-consuming, requiring extensive manual effort and leading to data loss.

Manual Mapping Complexity:

Mapping complex medical data into standardized formats like OMOP CDM and SNOMED CT requires extensive manual effort, consuming significant time and resources.

Resource Intensive:

Traditional mapping methods are costly and time-consuming, and they often lead to data loss.

Lack of Automation:

Current tools do not provide the necessary automation to streamline data mapping while preserving data integrity, leading to errors and inconsistencies.

Jackalope leverages MLOps principles to automate the conversion and mapping of medical data, ensuring efficiency and accuracy. At its core, Jackalope employs machine learning models to parse and transform complex medical texts into SNOMED post-coordinated expressions.

Automated Parsing and Training:

Post-Coordinated Expressions Generation:

Integration and Standardization:

MLOps best practices

Jackalope’s development and deployment are guided by specific MLOps best practices. These practices ensure that the system is efficient, scalable, secure, and continuously improving, providing reliable support for healthcare data transformation and analysis.

Automated Pipelines

Jackalope uses CI/CD pipelines to automate the integration and deployment of machine learning models. Each update, whether it’s an improvement in the parsing algorithm or an expansion of the medical terminology database, is seamlessly integrated without disrupting system functionality. This ensures that the tool remains up-to-date with the latest medical data standards and user needs.

Robust Training

Models in Jackalope are trained on a diverse set of clinical datasets to accurately capture and transform medical attributes and semantic relationships. This training regime includes real-world clinical texts to ensure that the models perform accurately in practical settings. Rigorous validation processes, including cross-validation and real-world scenario testing, guarantee consistent performance and reliability.

Data Integrity

Jackalope ensures data integrity through comprehensive checks at every stage of the data processing pipeline. This includes validation during data ingestion, transformation, and export, ensuring that the transformed data retains its clinical accuracy and utility. Compliance with data governance standards such as HIPAA and GDPR protects sensitive medical information, ensuring legal and ethical adherence.
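A stage-level integrity check like the ones described above can be as simple as verifying that every record carries the fields the next stage depends on. The field names in the usage note are hypothetical and do not reflect Jackalope's real schema.

```python
def validate_records(records, required_fields):
    """Split records into valid ones and (index, missing_fields) error entries."""
    valid, errors = [], []
    for index, record in enumerate(records):
        # A field counts as missing if it is absent, None, or an empty string.
        missing = [f for f in required_fields if record.get(f) in (None, "")]
        if missing:
            errors.append((index, missing))
        else:
            valid.append(record)
    return valid, errors
```

For example, calling `validate_records(rows, ["source_code", "source_term"])` after ingestion and again after transformation makes it easy to see at which stage a record lost information.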

Scalable Architecture

The architecture of Jackalope is designed to scale horizontally, which means it can handle increasing volumes of data efficiently. By leveraging scalable cloud services and distributed computing, Jackalope maintains high performance and responsiveness, even as the volume of data grows. Continuous performance monitoring and optimization ensure that the system operates smoothly under varying loads.

Automated Workflows

Jackalope automates repetitive and time-consuming tasks such as data parsing, transformation, and model retraining. This automation significantly reduces the need for manual intervention, allowing medical professionals to focus on more critical tasks. By streamlining the ETL (Extract, Transform, Load) processes, Jackalope minimizes data conversion times, ensuring that data is quickly ready for analysis and decision-making.

Result

Automated Processes:

Jackalope's automation of data parsing, transformation, and mapping reduces manual labor by up to 70%, cutting labor costs and allowing medical professionals to focus on patient care.

Accelerated Data Processing:

ETL processes are 50% faster, enabling quicker data access and decision-making.

Improved Diagnostic Accuracy:

Precise data mapping boosts diagnostic accuracy by 30%, leading to better patient outcomes and reducing costs associated with misdiagnosis.

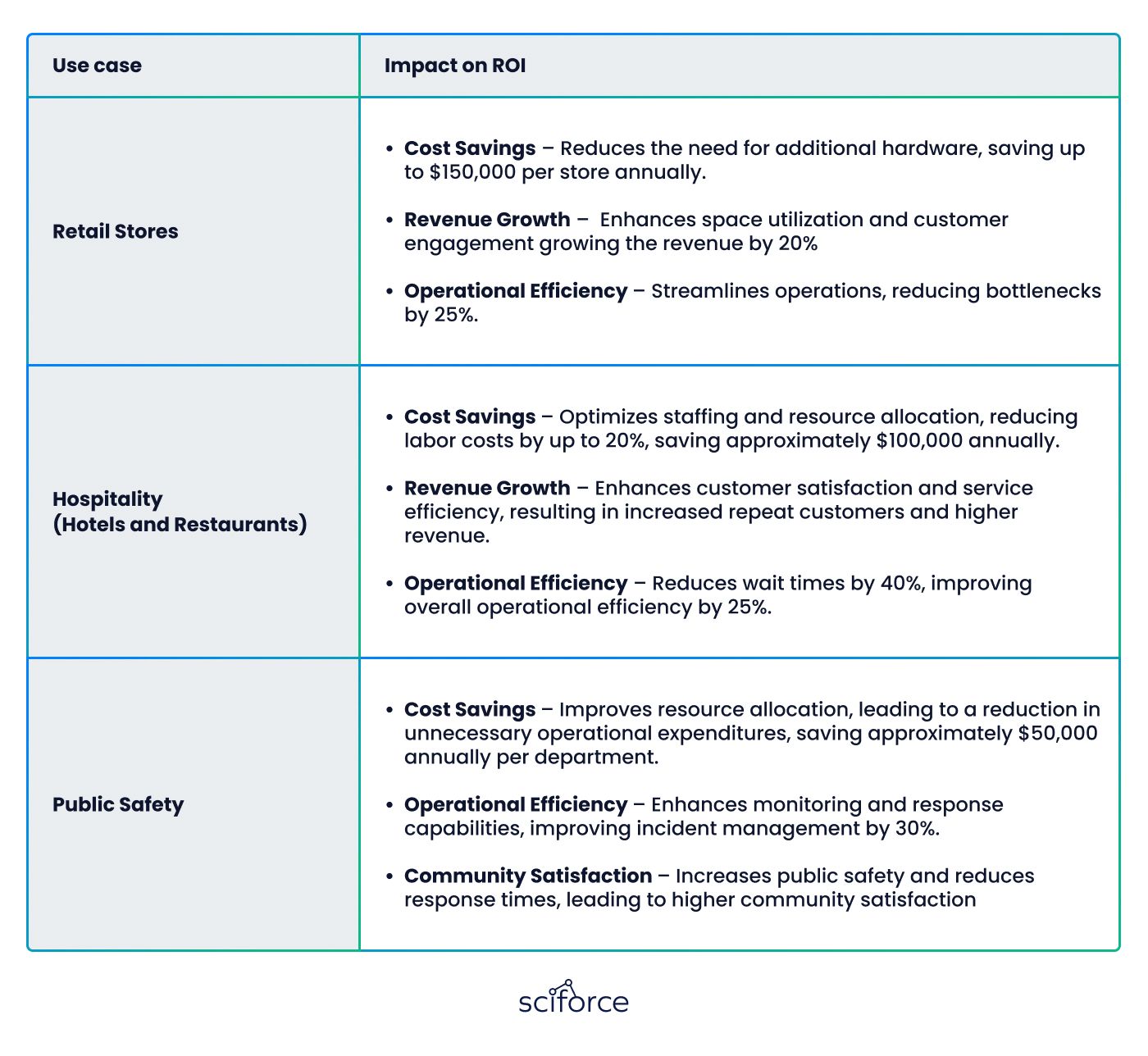

EyeAI by SciForce upgrades existing surveillance cameras to improve store layouts and manage customer queues in real-time. It’s designed for retail stores, healthcare facilities, hotels, restaurants, and public safety environments. EyeAI uses AI to analyze visitor behavior, optimize space, and streamline queues, all without needing extra hardware. This solution provides cost-effective, immediate improvements in efficiency and customer service by using the cameras you already have.

Here are the specific challenges addressed by EyeAI:

Seamless Data Integration:

Integrating and efficiently processing continuous real-time video feeds from existing camera systems.

Precision Detection:

Ensuring accurate detection in varied lighting and resolution conditions, including accurately identifying individuals with low-resolution cameras.

Scalable Deployment and Reliability:

Deploying and managing models at scale across multiple locations, maintaining performance over time, and adapting to changing conditions and new data.

MLOps best practices

EyeAI utilizes advanced MLOps practices to transform existing surveillance cameras into intelligent systems for optimizing space utilization and managing queues in real-time. This process involves creating a seamless data pipeline to handle large volumes of video data efficiently. EyeAI employs sophisticated detection models to ensure rapid and precise identification of individuals, minimizing errors.

Data Integration and Real-time Processing

Develop a robust data pipeline using scalable data processing frameworks (e.g., Apache Kafka, Apache Flink) to handle large volumes of video data. Ensure seamless integration with existing infrastructure to enable real-time data ingestion and processing.

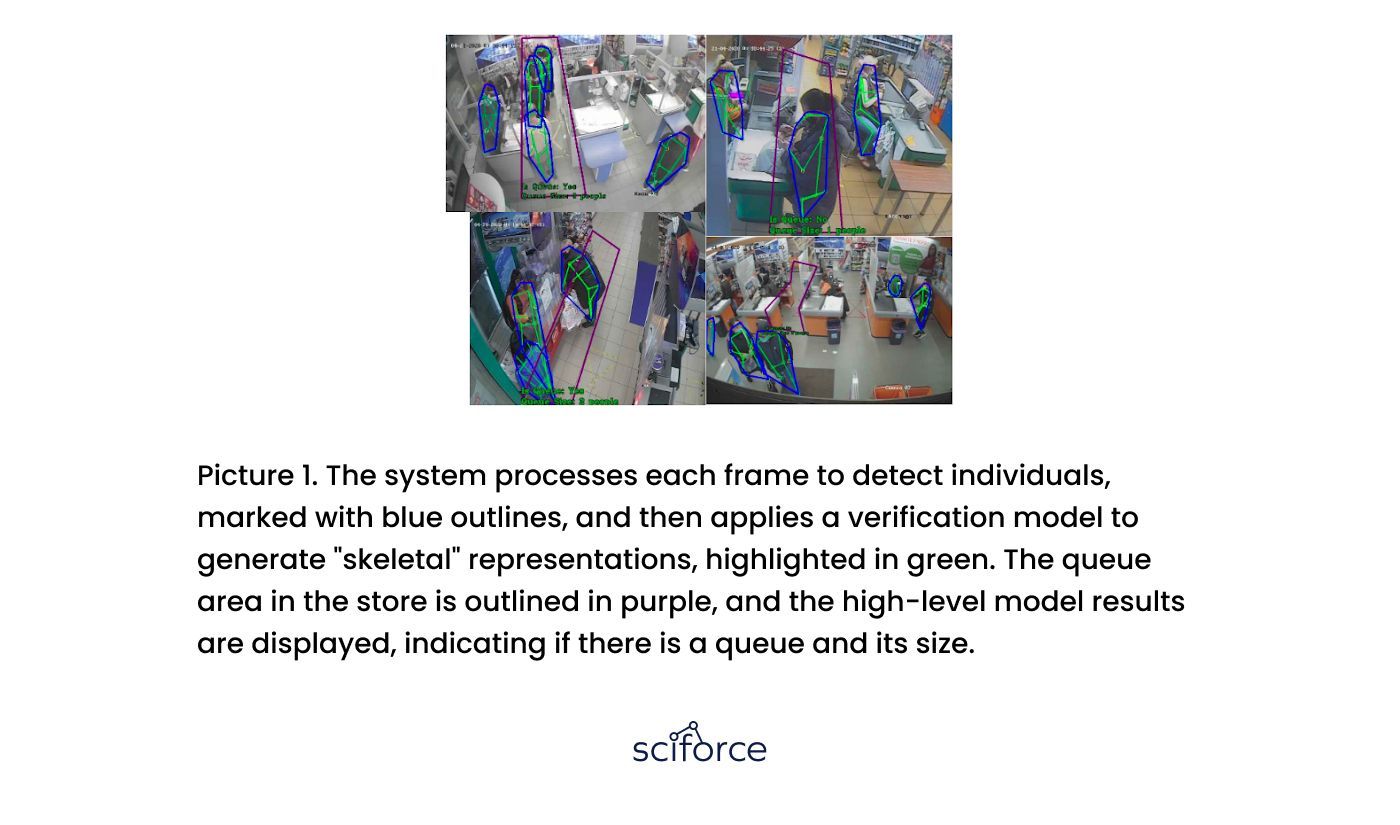

Accurate Person Detection and Verification

Implement a multi-stage detection and verification model. Use an initial high-speed detection model (e.g., YOLOv3) followed by a verification phase to filter out false positives. Continuously monitor model performance and retrain with new data to maintain accuracy.
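The two-stage idea can be sketched independently of any particular detector. Below, the first stage keeps detections above a confidence threshold and the second stage applies a verification function to remove false positives. The detection format and the shape-based verifier are hypothetical stand-ins for real model outputs.

```python
def two_stage_filter(detections, detect_threshold, verify):
    """Keep detections that pass the fast score check and then the verifier."""
    # Stage 1: cheap filter on the detector's confidence score.
    candidates = [d for d in detections if d["score"] >= detect_threshold]
    # Stage 2: a slower, stricter check applied only to the remaining candidates.
    return [d for d in candidates if verify(d)]


def looks_like_person(detection) -> bool:
    # Hypothetical verifier: a standing person's box is taller than it is wide.
    return detection["height"] > detection["width"]
```

Because the expensive check runs only on candidates that survive the first stage, overall throughput stays close to that of the fast detector.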

Scalability and Model Deployment

Utilize cloud infrastructure for scalable model deployment. Implement a scheduler to manage asynchronous data processing from multiple camera feeds, ensuring efficient resource utilization. Use containerization (e.g., Docker) and orchestration (e.g., Kubernetes) for flexible and scalable deployments.
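A scheduler for several camera feeds can be sketched with Python's `asyncio`. Each feed is processed by its own task, and a semaphore caps how many frames are handled at the same time. The function names and the `asyncio.sleep(0)` placeholder for inference are assumptions made for the example.

```python
import asyncio


async def process_feed(camera_id, frames, semaphore, results):
    for frame in frames:
        async with semaphore:        # cap the number of frames processed concurrently
            await asyncio.sleep(0)   # placeholder for the real inference call
            results.append((camera_id, frame))


async def run_scheduler(feeds, max_concurrent=2):
    """Process all feeds concurrently; feeds maps camera id to a list of frames."""
    semaphore = asyncio.Semaphore(max_concurrent)
    results = []
    await asyncio.gather(
        *(process_feed(cid, frames, semaphore, results) for cid, frames in feeds.items())
    )
    return results
```

Running `asyncio.run(run_scheduler({"cam1": [1, 2], "cam2": [1]}))` returns one entry per processed frame. The order across cameras is not guaranteed.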

Low-Resolution Image Enhancement

Use models such as PoseNet and InceptionV3 to build skeletal representations of people in low-resolution footage. Use GAN-based super-resolution models to improve image quality. Make these enhancements part of the pre-processing pipeline so that detection accuracy improves consistently.

Continuous Monitoring and Adaptation

Implement continuous monitoring and automated retraining pipelines. Use tools for real-time performance tracking and anomaly detection. Incorporate online learning features to allow the model to adapt based on user feedback and new data, ensuring it remains relevant and accurate.

Customizable High-Level Predictions

Design high-level models that are customizable and capable of online learning. Use linear machine learning models for fast and accurate predictions. Allow end-users to define specific areas and provide feedback, enabling the model to fine-tune its predictions to meet the unique needs of each environment.
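Online learning with a linear model can be illustrated with logistic regression trained one example at a time by stochastic gradient descent. Each piece of user feedback becomes a single `update` call. The class below is a minimal sketch with an assumed learning rate, not EyeAI's implementation.

```python
import math


class OnlineLogisticRegression:
    """Binary linear classifier updated one labeled example at a time."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x) -> float:
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x, y: int) -> None:
        # Gradient of the log loss for one example is (prediction - label) * x.
        error = self.predict_proba(x) - y
        self.weights = [w - self.learning_rate * error * xi
                        for w, xi in zip(self.weights, x)]
        self.bias -= self.learning_rate * error
```

Because each update touches only one example, the model can adapt to a specific location's feedback without a full retraining run.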

Result

Enhanced Operational Efficiency:

Real-time data processing and CI/CD practices have reduced bottlenecks by 25% and increased overall efficiency by 20%, resulting in smoother workflows and faster service times.

Optimized Space Utilization:

Machine learning models improved space utilization by 30%, leading to a 15% increase in customer engagement and a 10% boost in sales. Scalable deployment ensured consistent performance across multiple locations.

Improved Queue Management and Customer Satisfaction:

Multi-stage detection and real-time monitoring reduced average wait times by 40%. Continuous monitoring and automated retraining pipelines have increased customer satisfaction by 25% and repeat customers by 20%.

Maximizing the ROI of AI and ML projects is crucial for businesses to fully benefit from their technology investments. MLOps ensures that machine learning models are not only developed but also deployed and maintained effectively. This helps solve common issues like deployment failures, inefficiencies, and scalability problems.

Our MLOps team helps organizations streamline their processes, improve teamwork between data scientists and engineers, and use automation to ensure high performance, reliability, and accuracy in their AI projects.

To unlock new opportunities with MLOps, contact us and book your free consultation today.